Bird API written in Golang [Code to Containerization, CI/CD Pipelines, Deployed AWS Infra with Kubernetes and Monitoring ]

Step 0: System Design and How the APIs Interact

Source Code :

https://github.com/Gatete-Bruno/lifinance-devops-challenge?tab=readme-ov-file

Overview of the Source Code:

K8s-Bootstrapp: Contains various YAML files and scripts for bootstrapping and managing the Kubernetes cluster, including installation, node setup, updates, and SSH management. The structure is well-labeled, making it easy to understand each script’s purpose.

bird and birdImage: Each directory is dedicated to an API (bird and birdImage). They include Dockerfiles for containerization, Makefiles for build automation, Go modules (go.mod), and main application files. The separation of these two APIs into distinct folders makes it easier to manage them independently.

bird-chart: Contains Helm chart files for deploying the bird API. With distinct templates (deployment.yaml, service.yaml) and values (values.yaml), it helps streamline Kubernetes resource management.

k8s-manifests: Manages Kubernetes deployment manifests for both APIs (bird-api-deployment.yaml and bird-image-deployment.yaml). Keeping this separate from the Helm chart helps differentiate between Helm-based deployments and manual Kubernetes manifests.

observability: Focuses on monitoring and storage, including Longhorn storage, Prometheus, and service monitors. It’s good that observability is handled separately.

terraform: Contains the Infrastructure as Code (IaC) for managing AWS resources (IAM, EC2 instances, VPC). Keeping it isolated helps with infrastructure management.

How the Bird API works:

1. Bird API (bird/main.go)

This part of the application is responsible for providing information about different birds.

When a user sends a request to the Bird API, the system randomly selects a bird fact (name and description) from a third-party API:

https://freetestapi.com/api/v1/birds

The Bird API does two things:

Gets a bird fact: It asks the third-party API for a random bird's name and description (like "Eagle" or "Parrot").

Gets an image: It uses the bird's name to ask another API (the Bird Image API) to return an image related to that bird. If the image can't be found, a default "missing bird" image is shown.

After gathering the bird's name, description, and image, it combines them into a single response and sends it back to the user.

Example:

You ask the API for bird info, and it might return:

{

"name": "Eagle",

"description": "A powerful bird of prey with sharp vision.",

"image": "http://link-to-eagle-image.com"

}

If something goes wrong (for example, the API can’t find the bird or the image), it sends back a funny default bird with this image: https://www.pokemonmillennium.net/wp-content/uploads/2015/11/missingno.png

2. Bird Image API (birdImage/main.go)

This part of the application is responsible for finding and sending an image for a specific bird.

When the Bird API asks for an image, the Bird Image API searches for it on Unsplash, a photo-sharing website.

- It sends a request to Unsplash using the bird’s name (e.g., "Eagle") and asks Unsplash for a photo related to that bird.

The Bird Image API then processes the Unsplash response and extracts the URL of the image.

If it finds the bird image, it sends the image URL back to the Bird API.

If it can't find the bird image or there's an error, it sends back a default image (the same "missing bird" image).

Example:

The Bird Image API searches for an "Eagle" image on Unsplash and returns the URL to that image. If it can't find one, it returns the default "missing bird" image.
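The extraction step can be sketched as follows. The response shape used here (a results array whose items carry a urls.thumb field) is an assumption about the Unsplash search response, and extractImageURL is a hypothetical helper rather than the function in birdImage/main.go.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// defaultImage is the "missing bird" picture referenced in the text.
const defaultImage = "https://www.pokemonmillennium.net/wp-content/uploads/2015/11/missingno.png"

// searchResponse models only the fields this sketch needs. The shape
// (results[].urls.thumb) is an assumption about the Unsplash response.
type searchResponse struct {
	Results []struct {
		URLs struct {
			Thumb string `json:"thumb"`
		} `json:"urls"`
	} `json:"results"`
}

// extractImageURL returns the first non-empty thumbnail URL in body, or
// defaultImage when the body is malformed or contains no results.
func extractImageURL(body []byte) string {
	var sr searchResponse
	if err := json.Unmarshal(body, &sr); err != nil {
		return defaultImage
	}
	for _, r := range sr.Results {
		if r.URLs.Thumb != "" {
			return r.URLs.Thumb
		}
	}
	return defaultImage
}

func main() {
	found := []byte(`{"results":[{"urls":{"thumb":"https://images.example/eagle.jpg"}}]}`)
	empty := []byte(`{"results":[]}`)

	fmt.Println(extractImageURL(found)) // URL taken from the first result
	fmt.Println(extractImageURL(empty)) // falls back to the default image
}
```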

System Design :

1. GitHub (Source Control)

Purpose: Stores the codebase for the Bird API. It acts as the central repository where all changes, pull requests, and branches are managed.

Key Technology: GitHub

2. GitHub Actions (CI/CD Pipeline)

Purpose: Automates the process of building, testing, and deploying the Bird API. GitHub Actions is triggered by changes in the GitHub repository (e.g., push or pull request).

Key Steps:

Build: Docker images are built for both the bird and birdImage APIs.

Test: Runs unit and integration tests to verify the code quality.

Deploy: Once tests pass, the Docker image is pushed to DockerHub and later deployed to Kubernetes.

Key Technology: GitHub Actions

3. Docker (Containerization)

Purpose: Packages the Bird API and Bird Image API into Docker containers, ensuring consistency across different environments (development, testing, and production).

Key Technology: Docker

4. Helm (Kubernetes Package Manager)

Purpose: Automates the deployment and management of Kubernetes applications. Helm packages (charts) are used to define, install, and upgrade Kubernetes deployments, including the Bird API.

Key Technology: Helm

5. Kubernetes (Container Orchestration)

Purpose: Manages the containerized applications in a production-grade environment. It ensures high availability, scalability, and fault tolerance for the Bird API and Bird Image API.

Key Components:

Pods: The smallest unit in Kubernetes that runs the Bird API containers.

Service (SVC): Load balances traffic across the Pods to ensure seamless access to the API.

Key Technology: Kubernetes

6. Terraform (Infrastructure as Code)

Purpose: Provisions and manages AWS infrastructure required to run the Kubernetes cluster. Terraform defines resources such as VPCs, subnets, security groups, and EC2 instances.

Key Technology: Terraform

7. AWS (Cloud Infrastructure)

Purpose: AWS is the cloud provider where the Kubernetes cluster is deployed. It provides the virtual machines (EC2 instances) and networking infrastructure (VPC, subnets) required to run the applications.

Key AWS Services:

EC2: Virtual machines where the Kubernetes nodes run.

VPC: Virtual network for the infrastructure.

Subnets and Security Groups: Manage the networking and security of the EC2 instances.

Key Technology: AWS (EC2, VPC, Security Groups)

8. Ansible (Configuration Management)

Purpose: Ansible automates configuration management tasks, such as provisioning and managing EC2 instances. It ensures that the instances are properly configured to run Kubernetes.

Key Technology: Ansible

9. Kubernetes Cluster (On AWS)

Purpose: The Kubernetes cluster is deployed on EC2 instances within AWS. This cluster orchestrates the running of the Bird API and Bird Image API.

Key Components:

Master Node: Manages the Kubernetes control plane (API server, scheduler, controller manager).

Worker Nodes: Run the application Pods (Bird API and Bird Image API).

Service: Exposes the Pods and ensures that traffic is routed correctly to the applications.

10. Pods and Services (Running the Bird API)

Purpose: Each Pod contains one or more containers running the Bird API or Bird Image API. Kubernetes manages the lifecycle and scaling of these Pods.

Key Technology: Pods (within Kubernetes)

Step 1: Run the App Locally:

The first important step is to edit bird/main.go so that the Bird API calls the Bird Image API at the address where it is exposed locally.

To make the change, go to the bird directory and open main.go:

bruno@Batman-2 lifinance-devops-challenge % cd bird/

bruno@Batman-2 bird % ls

Dockerfile Makefile getBird go.mod main.go

bruno@Batman-2 bird % vi main.go

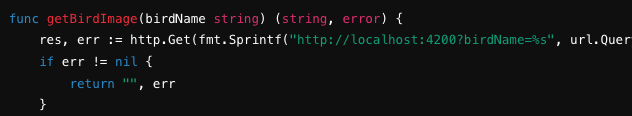

Change the line that calls the Bird Image API (around line 27) to the following:

res, err := http.Get(fmt.Sprintf("http://localhost:4200?birdName=%s", url.QueryEscape(birdName)))

To run the birdImage API locally, follow these steps:

1. Build the API

Since the Makefile provides a target to build the API, you can use the following command to compile the code:

make birdImage

2. Run the built executable

After building, you will have an executable named getBirdImage. Run the executable with:

./getBirdImage

This will start the server on port 4200 as specified in the main.go file:

http.ListenAndServe(":4200", nil)

3. Test the API

You can now test the API by sending a request to it. Use curl to make a request:

Without specifying a bird name (this will return the default image):

curl "http://localhost:4200/"

To run the bird API, follow these steps, similar to what we did for the birdImage API:

1. Build the API

Run the following command to build the bird API using the Makefile:

make bird

This will generate an executable named getBird.

2. Run the built executable

Once the build is complete, run the executable with:

./getBird

This will start the server on port 4201, as specified in the main.go file:

http.ListenAndServe(":4201", nil)

3. Test the API

To test the API, use curl to make a request to the bird API:

curl "http://localhost:4201/"

This request should return a random bird fact and an image. The getBirdImage function in your code sends a request to the birdImage API (which is running on localhost:4200) to retrieve an image for the bird.

- Ensure that the birdImage API is already running on localhost:4200 before running the bird API, as the bird API fetches bird images from it.

Step 2: Containerize the App Using Docker:

As in Step 1, the first thing to do is edit bird/main.go so that the Bird API container can reach the Bird Image API container.

To make the change, go to the bird directory and open main.go:

bruno@Batman-2 lifinance-devops-challenge % cd bird/

bruno@Batman-2 bird % ls

Dockerfile Makefile getBird go.mod main.go

bruno@Batman-2 bird % vi main.go

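Change the line that calls the Bird Image API (around line 27). Inside a container, localhost refers to the container itself, so use the name of the Bird Image API container on the shared Docker network (bird-image-api, as used in the docker run commands in this step):

res, err := http.Get(fmt.Sprintf("http://bird-image-api:4200?birdName=%s", url.QueryEscape(birdName)))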

To Dockerize both the Bird API and Bird Image API, you'll need to create a Dockerfile for each API in their respective directories. Here's how you can structure the Dockerfiles.

For the bird directory:

# syntax=docker/dockerfile:1

FROM golang:1.22-alpine AS builder

# Set the working directory

WORKDIR /app

# Copy the go.mod file

COPY go.mod ./

# Download dependencies

RUN go mod download

# Copy the rest of the application code

COPY . .

# Build the bird API

RUN go build -o bird-api .

# Use the latest minimal Alpine image for the final deployment

FROM alpine:latest

WORKDIR /root/

COPY --from=builder /app/bird-api .

CMD ["./bird-api"]

EXPOSE 4201

For the birdImage directory:

# syntax=docker/dockerfile:1

FROM golang:1.22-alpine AS builder

# Set the working directory

WORKDIR /app

# Copy the go.mod file

COPY go.mod ./

# Download dependencies

RUN go mod download

# Copy the rest of the application code

COPY . .

# Build the birdImage API

RUN go build -o bird-image-api .

# Use the latest minimal Alpine image for the final deployment

FROM alpine:latest

WORKDIR /root/

COPY --from=builder /app/bird-image-api .

CMD ["./bird-image-api"]

EXPOSE 4200

Most importantly, for the containers to communicate, they need to be on the same Docker network, so this is what we do:

# Create a network (only once)

docker network create bird-net

# Run both containers on the network

docker run -d --name bird-image-api --network bird-net -p 4200:4200 bruno74t/bird-image-api

docker run -d --name bird-api --network bird-net -p 4201:4201 bruno74t/bird-api

Note that I have already logged in to Docker Hub, which I use as the container registry.


Step 3: CI/CD Pipeline Using GitHub Actions

To set up the CI/CD pipeline, we use GitHub Actions. The workflow file looks like this:

bruno@Batman-2 workflows % ls

docker-ci.yml

bruno@Batman-2 workflows %

bruno@Batman-2 workflows % cat docker-ci.yml

name: CI/CD for Bird APIs

on:
  push:
    branches:
      - main
  pull_request:
    branches:
      - main

jobs:
  build:
    runs-on: ubuntu-latest

    services:
      docker:
        image: docker:20.10.7
        options: --privileged
        ports:
          - 2375:2375

    env:
      DOCKER_HOST: tcp://localhost:2375

    steps:
      # Step 1: Checkout the code
      - name: Checkout Code
        uses: actions/checkout@v2

      # Step 2: Set up Docker Buildx
      - name: Set up Docker Buildx
        uses: docker/setup-buildx-action@v2

      # Step 3: Log into Docker Hub
      - name: Log into Docker Hub
        run: echo "${{ secrets.DOCKER_PASSWORD_SYMBOLS_ALLOWED }}" | docker login --username "${{ secrets.DOCKER_USERNAME }}" --password-stdin

      # Step 4: Build and push bird API image
      - name: Build and Push Bird API Docker Image
        run: |
          docker build -t ${{ secrets.DOCKER_USERNAME }}/bird-api:v.1.0.2 ./bird
          docker push ${{ secrets.DOCKER_USERNAME }}/bird-api:v.1.0.2

      # Step 5: Build and push birdImage API image
      - name: Build and Push BirdImage API Docker Image
        run: |
          docker build -t ${{ secrets.DOCKER_USERNAME }}/bird-image-api:v.1.0.2 ./birdImage
          docker push ${{ secrets.DOCKER_USERNAME }}/bird-image-api:v.1.0.2

bruno@Batman-2 workflows %

Once the source code is pushed to GitHub, the build process starts and the images are pushed to my Docker Hub registry.

Step 4: Build AWS infrastructure

Our working directory for this step is terraform:

bruno@Batman-2 terraform % tree

.

├── iam-output.tf

├── instanct.tf

├── terraform.tfstate

├── terraform.tfstate.backup

└── vpc.tf

1 directory, 5 files

bruno@Batman-2 terraform %

The Source Code is on my Github repo

https://github.com/Gatete-Bruno/lifinance-devops-challenge/tree/main/terraform

Requirements:

AWS account

An AWS user with sufficient privileges to set up these resources

SSH key to access the EC2 instances

AWS CLI configured using aws configure

Terraform installed on the local machine

terraform init

terraform validate

terraform plan

terraform apply

Once you type yes at the terraform apply prompt, the resources will be provisioned.

These are some of the resources provisioned by terraform leveraging Infrastructure as Code.

Step 5: Bootstrap the Kubernetes Cluster Using KubeKey

I am using Ansible to automate the provisioning of the cluster:

Requirements:

The local machine's SSH public key installed on each EC2 instance

Each EC2 instance's public key installed on the other instances so that they can communicate with each other

To test connectivity between the local machine and the EC2 instances, we use a ping.yaml playbook.

After that, we edit a few important files:

bruno@Batman-2 Kubeky-Bootstrapping-Ansible % cat config-sample.yaml

apiVersion: kubekey.kubesphere.io/v1alpha2
kind: Cluster
metadata:
  name: sample
spec:
  hosts:
  - {name: master1, address: 54.91.229.69, internalAddress: 10.0.1.151, user: kato}
  - {name: node1, address: 3.91.27.241, internalAddress: 10.0.1.5, user: kato}
  - {name: node2, address: 3.88.157.155, internalAddress: 10.0.1.196, user: kato}
  roleGroups:
    etcd:
    - master1
    control-plane:
    - master1
    worker:
    - node1
    - node2
  controlPlaneEndpoint:
    ## Internal loadbalancer for apiservers
    # internalLoadbalancer: haproxy
    domain: lb.kubesphere.local
    address: ""
    port: 6443
  kubernetes:
    version: v1.23.10
    clusterName: cluster.local
    autoRenewCerts: true
    containerManager: docker
  etcd:
    type: kubekey
  network:
    plugin: calico
    kubePodsCIDR: 10.233.64.0/18
    kubeServiceCIDR: 10.233.0.0/18
    ## multus support. https://github.com/k8snetworkplumbingwg/multus-cni
    multusCNI:
      enabled: false
  registry:
    privateRegistry: ""
    namespaceOverride: ""
    registryMirrors: []
    insecureRegistries: []
  addons: []

bruno@Batman-2 Kubeky-Bootstrapping-Ansible %

I added the IP addresses and the user for authentication purposes.

Once that is done, we run this file, which imports all the playbooks:

bruno@Batman-2 Kubeky-Bootstrapping-Ansible % cat setup-kubernetes-cluster.yaml

---
# - name: Add all nodes to known hosts
#   import_playbook: 00-add-to-ssh-known-hosts.yaml

- name: Update nodes
  import_playbook: 01-update-servers.yaml

- name: Install required modules
  import_playbook: 02-install-requirements.yaml

- name: Disable swap on all nodes
  import_playbook: 03-disable-swap.yaml

- name: Download & setup kubekey
  import_playbook: 04-download-kubekey.yaml

- name: Install k8
  import_playbook: 05-install-kubernetes.yaml

bruno@Batman-2 Kubeky-Bootstrapping-Ansible %

On completion, the cluster should be up and running.

Step 6: Deploy Containerized Application

Two approaches:

Approach 1: Build Kubernetes manifest files

https://github.com/Gatete-Bruno/lifinance-devops-challenge/tree/main/k8s-manifests

Approach 2: Use Helm to package the application

https://github.com/Gatete-Bruno/lifinance-devops-challenge/tree/main/bird-chart

I opted for approach two because it makes it easier to package the application and make changes:

Install Helm

curl https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3 | bash

Initialize a Helm Chart

helm create bird-chart

Customize the chart dir and edit the files

bruno@Batman-2 bird-chart % tree

.

├── Chart.yaml

├── charts

├── templates

│ ├── _helpers.tpl

│ ├── deployment.yaml

│ └── service.yaml

└── values.yaml

3 directories, 5 files

bruno@Batman-2 bird-chart %

Package and Install the Helm Chart

# Package the chart

helm package bird-chart

# Install the chart in your Kubernetes cluster

helm install bird-api-release bird-chart/

Verify the Installation

After the Helm chart is installed, you can verify the resources:

[root@master1 lifinance-devops-challenge]# ls

README.md bird bird-chart bird-chart-0.1.0.tgz birdImage k8s-manifests observability terraform

[root@master1 lifinance-devops-challenge]# kubectl get all

NAME READY STATUS RESTARTS AGE

pod/bird-api-release-bird-api-549bc4d-f75v8 1/1 Running 0 14h

pod/bird-api-release-bird-api-549bc4d-t6gl2 1/1 Running 0 14h

pod/bird-api-release-bird-image-api-797b664f65-jpl9s 1/1 Running 0 14h

pod/bird-api-release-bird-image-api-797b664f65-nkzsr 1/1 Running 0 14h

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/bird-api-release-bird-api-service NodePort 10.233.48.70 <none> 80:32493/TCP 14h

service/bird-api-release-bird-image-api-service NodePort 10.233.14.223 <none> 80:31708/TCP 14h

service/kubernetes ClusterIP 10.233.0.1 <none> 443/TCP 16h

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/bird-api-release-bird-api 2/2 2 2 14h

deployment.apps/bird-api-release-bird-image-api 2/2 2 2 14h

NAME DESIRED CURRENT READY AGE

replicaset.apps/bird-api-release-bird-api-549bc4d 2 2 2 14h

replicaset.apps/bird-api-release-bird-image-api-797b664f65 2 2 2 14h

[root@master1 lifinance-devops-challenge]#

Since I used NodePort services, I can access the applications on any node's IP address at the assigned ports (32493 for the Bird API and 31708 for the Bird Image API).

Step 7: Observability for the Two APIs

We will install Prometheus and Grafana using Helm. Before we begin, we will install Longhorn to handle our PersistentVolumeClaims (PVCs) and PersistentVolumes (PVs).

How to install Longhorn using Helm

What Longhorn Does

Longhorn is a cloud-native distributed block storage system for Kubernetes. It provides persistent storage for your applications running in Kubernetes clusters and can dynamically provision storage volumes to meet the needs of your PVCs.

Steps to Use Longhorn to Solve the Issue

Install Longhorn

If you haven’t already installed Longhorn, you can install it using Helm:

helm repo add longhorn https://charts.longhorn.io

helm repo update

helm install longhorn longhorn/longhorn --namespace longhorn-system --create-namespace

Configure Longhorn

After installation, access the Longhorn UI to configure storage settings. You can typically access it via a LoadBalancer or NodePort service exposed in the longhorn-system namespace.

kubectl get svc -n longhorn-system

Look for the Longhorn UI service and access it using the provided external IP or NodePort.

Create and Attach Storage Classes

Define a storage class for Longhorn if it hasn’t been created by default. Here’s an example:

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: longhorn
provisioner: driver.longhorn.io
volumeBindingMode: WaitForFirstConsumer

Apply the storage class:

kubectl apply -f longhorn-storage-class.yaml

Reinstall Prometheus and Grafana

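If you are using Helm, you can easily reinstall Prometheus and Grafana using the Prometheus and Grafana Helm charts. Note that the kube-prometheus-stack chart bundles Grafana by default, so the separate Grafana release is optional.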

- Reinstall Prometheus:

helm install prometheus prometheus-community/kube-prometheus-stack -n monitoring

- Reinstall Grafana:

helm install grafana grafana/grafana -n monitoring

Ensure that the correct Helm repositories are added before running the install commands:

helm repo add prometheus-community https://prometheus-community.github.io/helm-charts

helm repo add grafana https://grafana.github.io/helm-charts

helm repo update

Verify the installation

You can check the status of the newly installed components:

kubectl get all -n monitoring

With a few metrics, we can build a visualization:

Step 8: Scaling the Bird-API App

There are two options we can consider here:

Update the values.yaml for scaling (since we are using Helm)

Horizontal Pod Autoscaler (HPA)

Option 1: Update Values file

To scale your application running on Kubernetes seamlessly, you can leverage Helm to update the number of replicas for each deployment. Since you're using Helm, the scaling process can be done by updating the values.yaml file and then redeploying the Helm chart.

Here's a step-by-step guide to scaling your application:

1. Update the values.yaml for scaling

In your values.yaml, you already have the replicas set for both birdApi and birdImageApi. To scale, simply increase the replica count for each:

birdApi:
  image: "bruno74t/bird-api:v.1.0.2"
  replicas: 4 # Increased from 2 to 4
  nodePort: 32493

birdImageApi:
  image: "bruno74t/bird-image-api:v.1.0.2"
  replicas: 4 # Increased from 2 to 4
  nodePort: 31708

You can adjust the number of replicas to suit your desired scale.

2. Apply the updated Helm chart

After modifying the values.yaml file, you can redeploy the Helm chart with the updated replica counts.

helm upgrade bird-api-release ./bird-chart

This command will update your Helm release with the new configuration and scale your application to the desired number of replicas.

3. Verify the scaling

After the Helm chart is upgraded, verify that the scaling has taken place by checking the status of your pods and deployments:

kubectl get deployments

kubectl get pods

You should see the increased number of pods for both birdApi and birdImageApi reflecting the new replica counts.

Option 2: HPA

The Horizontal Pod Autoscaler (HPA) in Kubernetes is a feature that automatically adjusts the number of replicas of a deployment, replication controller, or stateful set based on observed CPU, memory, or custom metrics. This provides an automated way to scale applications based on the current workload, ensuring that your application can handle increased traffic or reduced traffic without manual intervention.

Here's an in-depth explanation of how the HPA works and how you can implement it in your Kubernetes cluster.

1. How HPA Works

The Horizontal Pod Autoscaler controller periodically checks the resource usage (like CPU or memory) of the pods in a deployment and adjusts the number of running replicas to match the desired performance goals. The HPA can scale up (increase the number of replicas) or scale down (reduce the number of replicas) based on the configured thresholds.

HPA relies on Kubernetes Metrics API, which collects resource usage data from the running pods. You can also use custom metrics or external metrics to trigger scaling events, but for now, we'll focus on CPU utilization.

2. Prerequisites for HPA

Before setting up HPA, you need to ensure the following:

Metrics Server Installed: The Metrics Server is required for HPA to collect resource usage information like CPU and memory. You can install it by running:

kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

Resource Requests and Limits: For utilization-based scaling, HPA requires that your deployments specify CPU (or memory) resource requests, because utilization is computed as a percentage of the request. Limits are optional for HPA but recommended. For example, in your deployment.yaml file, you need to specify these values:

resources:
  requests:
    cpu: "100m" # The pod requests 100 milli-CPU (10% of a CPU)
  limits:
    cpu: "500m" # The pod can use up to 500 milli-CPU (50% of a CPU)

3. Configuring Horizontal Pod Autoscaler

Once the prerequisites are in place, you can create a Horizontal Pod Autoscaler to monitor your application's CPU usage and adjust the number of replicas accordingly.

Here’s how to configure the HPA:

a. Command-Line HPA Setup

You can create an HPA using a single command that specifies the target deployment, the metric (CPU usage), and the desired minimum and maximum number of replicas.

For example, to create an HPA for your bird-api deployment that maintains CPU usage at 50%, with a minimum of 2 replicas and a maximum of 10 replicas:

kubectl autoscale deployment bird-api-release-bird-api --cpu-percent=50 --min=2 --max=10

Explanation:

bird-api-release-bird-api: This is the deployment you want to scale.

--cpu-percent=50: This tells the HPA to scale the deployment if the average CPU usage across all pods exceeds 50% of the requested CPU.

--min=2: This sets the minimum number of pods to 2, even if the CPU usage is low.

--max=10: This sets the maximum number of pods to 10, even if the CPU usage is high.

b. YAML-Based HPA Configuration

You can also create an HPA using a YAML configuration file. Here’s an example YAML for your bird-api deployment:

apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: bird-api-hpa
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: bird-api-release-bird-api # Target deployment to scale
  minReplicas: 2 # Minimum number of replicas
  maxReplicas: 10 # Maximum number of replicas
  metrics:
  - type: Resource
    resource:
      name: cpu
      target:
        type: Utilization
        averageUtilization: 50 # Target average CPU usage (percentage)

Once you create this YAML file, you can apply it with:

kubectl apply -f bird-api-hpa.yaml

4. How HPA Makes Scaling Decisions

Metrics Collection: The HPA controller queries the Metrics Server (or custom/external metrics) to get the current CPU usage of all pods in the target deployment.

Scaling Up: If the average CPU usage across all pods exceeds the target threshold (e.g., 50%), the HPA will increase the number of replicas to distribute the load more evenly.

Scaling Down: If the average CPU usage is below the target threshold, the HPA will reduce the number of replicas to avoid overprovisioning resources.

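Cooldown Period: The HPA doesn't immediately scale down in response to transient changes in metrics. By default, scale-down decisions use a stabilization window of 5 minutes (configurable through behavior.scaleDown.stabilizationWindowSeconds in autoscaling/v2), while scale-up has no stabilization window by default. This prevents rapid, unnecessary scaling.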
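The core of the scaling decision can be written as a small function. This sketch follows the formula in the Kubernetes documentation, desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric), with the default 10% tolerance, and then clamps the result to the configured bounds. It is a simplification: the real controller also accounts for pods that are not ready, missing metrics, and stabilization windows. The function name and signature are chosen for this example.

```go
package main

import (
	"fmt"
	"math"
)

// tolerance is the default band around the target within which the
// controller leaves the replica count unchanged.
const tolerance = 0.1

// desiredReplicas applies the documented HPA formula and clamps the result
// to [minReplicas, maxReplicas]. Utilization values are percentages of the
// CPU request, for example 60 for 60%.
func desiredReplicas(current int, currentUtil, targetUtil float64, minReplicas, maxReplicas int) int {
	desired := current
	ratio := currentUtil / targetUtil
	if math.Abs(ratio-1.0) > tolerance {
		// Multiply before dividing to keep the arithmetic exact for
		// whole-number percentages.
		desired = int(math.Ceil(float64(current) * currentUtil / targetUtil))
	}
	if desired < minReplicas {
		desired = minReplicas
	}
	if desired > maxReplicas {
		desired = maxReplicas
	}
	return desired
}

func main() {
	// 5 replicas at 60% against a 50% target: ceil(5 * 60 / 50) = 6.
	fmt.Println(desiredReplicas(5, 60, 50, 2, 10))
	// 52% is within the 10% tolerance of 50%, so nothing changes.
	fmt.Println(desiredReplicas(4, 52, 50, 2, 10))
	// Low load would give 1 replica, but minReplicas keeps it at 2.
	fmt.Println(desiredReplicas(3, 10, 50, 2, 10))
	// High load would give 16 replicas, but maxReplicas caps it at 10.
	fmt.Println(desiredReplicas(8, 100, 50, 2, 10))
}
```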

5. Monitoring HPA

To check the status of your HPA, you can use the following command:

kubectl get hpa

It will show you the current state of the HPA, including the current and desired number of replicas, as well as the observed CPU utilization.

Example output:

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

bird-api-hpa Deployment/bird-api-release-bird-api 60%/50% 2 10 6 2m

6. Scaling Based on Custom Metrics (Optional)

While CPU is the most common metric used for scaling, HPA can also scale based on memory, custom metrics, or even external metrics (e.g., requests per second). Here’s an example of scaling based on memory:

metrics:
- type: Resource
  resource:
    name: memory
    target:
      type: Utilization
      averageUtilization: 75 # Scale when memory usage is above 75%

7. Best Practices for HPA

Set appropriate resource requests and limits: Ensure your deployments have proper resource requests and limits, as HPA relies on these to calculate utilization.

Choose sensible min and max replicas: Setting the right min and max replica counts prevents your application from being under or over-provisioned.

Monitor your HPA: Regularly check your HPA’s performance to ensure that it's scaling as expected. Tools like Prometheus and Grafana can provide detailed insights into how your application scales over time.

Test with load: To see how HPA behaves under real-world conditions, perform load tests on your application and monitor how the replicas adjust.

Step 9: Security Best Practices

Enhance Terraform Provisioning Part:

bruno@Batman-2 terraform % tree

.

├── iam-output.tf

├── instanct.tf

└── vpc.tf

1 directory, 3 files

| Area | Best Practice | Explanation |
|---|---|---|
| SSH Access | Restrict SSH access to trusted IP ranges | Instead of allowing SSH from 0.0.0.0/0, specify trusted IPs for better security. |
| Public Exposure | Limit access to Kubernetes API (port 6443) and NodePort services (30000-32767) | Restrict these ports to trusted IPs rather than exposing them to the public internet. |
| Kubelet API Access | Restrict access to Kubelet API (ports 10250-10255) | Ensure Kubelet ports are accessible only by trusted IPs to prevent unauthorized access. |
| Longhorn Services | Limit access to Longhorn services (ports 9500-9509) | Avoid exposing these ports to the public internet; use a more restricted IP range. |
| Security Groups | Restrict outbound traffic in security groups | Instead of allowing all outbound traffic (0.0.0.0/0), restrict outbound traffic to necessary services and ports. |
| Use Private Subnets | Deploy sensitive resources in private subnets | Keep sensitive infrastructure away from public exposure by placing them in private subnets. |
| IAM Roles | Apply least-privilege IAM roles | Grant EC2 instances only the permissions they need to perform their specific tasks, such as accessing S3 or CloudWatch. |
| System Updates | Automate system updates and patching | Include automatic security updates in the instance's user data section to keep systems secure and up to date. |
| Logging and Monitoring | Enable VPC Flow Logs or AWS CloudTrail for traffic monitoring | Track traffic and actions within the infrastructure to detect unusual activity and improve visibility. |
| Data Encryption | Encrypt sensitive data in transit | Ensure encryption for Kubernetes control plane communication, API traffic, and other sensitive data transmissions. |
| Security Monitoring | Set up security tools like AWS GuardDuty | Use AWS security monitoring services to detect threats and monitor suspicious activity across the infrastructure. |

Enhancing Security on the Kubernetes Cluster

| Security Measure | Purpose | Outcome |
|---|---|---|
| Ingress Controller with SSL/TLS | Secure access with HTTPS using certificates | Encrypted, secure access to your app |
| Network Policies (Ingress/Egress) | Control traffic flow into and out of your cluster | Reduced attack surface |
| RBAC | Limit access and privileges in the cluster | Minimized access risks |
| Secrets Management | Secure sensitive data such as API keys | Encrypted and restricted access to sensitive info |
| Pod Security Standards (PSS) | Ensure pods run securely (e.g., as non-root users) | Prevent privilege escalation |
| Secure API Access | Restrict and monitor access to the Kubernetes API | Improved control over the control plane |
| Ingress with DNS and Certificates | Automate DNS and certificates for secure and easy access | Simplified management and secure access |