Photo by Mollie Sivaram on Unsplash

Netflix Application Local Deployment: Containerization and CI/CD with Jenkins [Part One]

Introduction

In this series, we'll guide you through deploying a Netflix application locally using Ubuntu VMs running on a hypervisor, Docker, Jenkins for CI/CD, and later a Kubernetes cluster. This post covers deploying the app with Docker on an Ubuntu VM, adding security scanning with SonarQube and Trivy, automating the build with a Jenkins pipeline, and setting up monitoring with Prometheus and Grafana.

Environment Overview

Infrastructure

Ubuntu VMs running inside a Hypervisor

Docker-VM for testing the Netflix image

Jenkins Server for CI/CD

Kubernetes Cluster (to be covered in Part Two)

Phase 1: Deploying Netflix Locally on an Ubuntu VM

Step 1: Installing Docker

We'll start by setting up Docker on our Ubuntu VM. Here's a simple bash script to get you started:

#!/bin/bash

sudo apt-get update

sudo apt-get install docker.io -y

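sudo usermod -aG docker "$USER" # adds the current user (kato in my case) to the docker group

# The group change applies to new sessions only: log out and back in, or run "newgrp docker"

# in your interactive shell after the script finishes (inside a script, newgrp opens a subshell and stalls the script).

# Avoid "chmod 777 /var/run/docker.sock": it gives every local user full control of the Docker daemon.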

Log in to the VM and check that the Docker service is running, then clone the Git repository and change into its directory:

kato@docker-srv:~$ systemctl status docker.service

● docker.service - Docker Application Container Engine

Loaded: loaded (/lib/systemd/system/docker.service; enabled; vendor preset: enabled)

Active: active (running) since Wed 2024-02-28 11:52:28 UTC; 23min ago

TriggeredBy: ● docker.socket

Docs: https://docs.docker.com

Main PID: 1047 (dockerd)

Tasks: 35

Memory: 140.1M

CGroup: /system.slice/docker.service

└─1047 /usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock

Feb 28 11:52:28 docker-srv dockerd[1047]: time="2024-02-28T11:52:28.171540357Z" level=warning msg="Error (Unable to complete atomic operation, key modified) deleting object [endpoint 6e91142ca59f5945f3d4>

Feb 28 11:52:28 docker-srv dockerd[1047]: time="2024-02-28T11:52:28.177169868Z" level=info msg="Removing stale sandbox a1b32712306c20ca50ff86aab615d4f242716c1974186c5a56667439c5938643 (1389dac14ffa276459>

Feb 28 11:52:28 docker-srv dockerd[1047]: time="2024-02-28T11:52:28.211344516Z" level=warning msg="Error (Unable to complete atomic operation, key modified) deleting object [endpoint 6e91142ca59f5945f3d4>

Feb 28 11:52:28 docker-srv dockerd[1047]: time="2024-02-28T11:52:28.252405393Z" level=info msg="Default bridge (docker0) is assigned with an IP address 172.17.0.0/16. Daemon option --bip can be used to s>

Feb 28 11:52:28 docker-srv dockerd[1047]: time="2024-02-28T11:52:28.305113059Z" level=info msg="Loading containers: done."

Feb 28 11:52:28 docker-srv dockerd[1047]: time="2024-02-28T11:52:28.619231150Z" level=warning msg="WARNING: No swap limit support"

Feb 28 11:52:28 docker-srv dockerd[1047]: time="2024-02-28T11:52:28.619543471Z" level=info msg="Docker daemon" commit="24.0.5-0ubuntu1~20.04.1" graphdriver=overlay2 version=24.0.5

Feb 28 11:52:28 docker-srv dockerd[1047]: time="2024-02-28T11:52:28.629660386Z" level=info msg="Daemon has completed initialization"

Feb 28 11:52:28 docker-srv dockerd[1047]: time="2024-02-28T11:52:28.772123177Z" level=info msg="API listen on /run/docker.sock"

Feb 28 11:52:28 docker-srv systemd[1]: Started Docker Application Container Engine.

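Running docker build from the home directory fails, because the Dockerfile is part of the repository, which has not been cloned yet:

kato@docker-srv:~$ docker build -t netflix .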

DEPRECATED: The legacy builder is deprecated and will be removed in a future release.

Install the buildx component to build images with BuildKit:

https://docs.docker.com/go/buildx/

unable to prepare context: unable to evaluate symlinks in Dockerfile path: lstat /home/kato/Dockerfile: no such file or directory

kato@docker-srv:~$ git clone https://github.com/Gatete-Bruno/Netflix-Local.git

Cloning into 'Netflix-Local'...

remote: Enumerating objects: 144, done.

remote: Counting objects: 100% (144/144), done.

remote: Compressing objects: 100% (120/120), done.

remote: Total 144 (delta 12), reused 139 (delta 10), pack-reused 0

Receiving objects: 100% (144/144), 7.60 MiB | 434.00 KiB/s, done.

Resolving deltas: 100% (12/12), done.

kato@docker-srv:~$ cd Netflix-Local/

Step 2: Setting Up Docker and Building Images

Now that Docker is installed on our Ubuntu VM, let's proceed with building and running the Netflix container.

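Dockerfile (Check the Source Code)

The image is built from the Dockerfile in the repository root, reproduced below. It is a multi-stage build: a Node.js stage installs dependencies with yarn and compiles the app with yarn build, and an Nginx stage serves the generated files from /app/dist on port 80. The TMDB API key is passed as the TMDB_V3_API_KEY build argument and baked into the bundle at build time, so changing the key requires rebuilding the image.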

FROM node:16.17.0-alpine as builder

WORKDIR /app

COPY ./package.json .

COPY ./yarn.lock .

RUN yarn install

COPY . .

ARG TMDB_V3_API_KEY

ENV VITE_APP_TMDB_V3_API_KEY=${TMDB_V3_API_KEY}

ENV VITE_APP_API_ENDPOINT_URL="https://api.themoviedb.org/3"

RUN yarn build

FROM nginx:stable-alpine

WORKDIR /usr/share/nginx/html

RUN rm -rf ./*

COPY --from=builder /app/dist .

EXPOSE 80

ENTRYPOINT ["nginx", "-g", "daemon off;"]

kato@docker-srv:~/Netflix-Local$ docker build -t netflix .

Removing intermediate container 4d6d833a0c1b

---> ce5531cc9756

Step 16/16 : ENTRYPOINT ["nginx", "-g", "daemon off;"]

---> Running in 79d4022279d2

Removing intermediate container 79d4022279d2

---> 233443b928e1

Successfully built 233443b928e1

Successfully tagged netflix:latest

kato@docker-srv:~/Netflix-Local$ docker run -d --name netflix -p 8081:80 netflix:latest

e4a0e712a4fa188288f7fc1f3d02df6595c2a6a34b5341d1c21c52b80b580c3e

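The app is now reachable at http://192.168.1.50:8081 (the VM's IP address and the published host port). It was built without a TMDB API key, however, so it cannot load movie data until the next steps are done.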

Understanding APIs

An API (Application Programming Interface) is comparable to a restaurant menu. It serves as a means for different software systems or applications to communicate with each other, enabling the exchange of structured information or functions.

Step 3: Setting Up the TMDB API

The app does not connect to Netflix; it retrieves movie data from TMDB (The Movie Database) through the TMDB API, which requires an API key. Follow these steps:

Open your web browser and visit the TMDB (The Movie Database) website.

Click on the "Sign Up" button to create an account.

Retrieving TMDB API Key

Log in to your TMDB (The Movie Database) account using your username and password.

Navigate to your profile and select "Settings."

Click on "API" in the left-side panel.

Generate a new API key by selecting "Generate New API Key" and accepting the terms and conditions.

Provide essential details like the name and website URL of your Netflix application. Click "Submit."

Your TMDB API key will be generated. Copy the API key for later use in your Netflix application.
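Before rebuilding the image, you can check that TMDB accepts the key. The following is a minimal Python sketch that uses only the standard library. It requests TMDB's /movie/popular endpoint and passes the key as the api_key query parameter, which is how v3 keys are sent. The endpoint choice and the function names are illustrative assumptions, not part of the project.

```python
import json
import urllib.parse
import urllib.request

# Base URL from VITE_APP_API_ENDPOINT_URL in the Dockerfile
TMDB_BASE = "https://api.themoviedb.org/3"


def build_tmdb_url(path: str, api_key: str, **params: str) -> str:
    """Build a TMDB v3 URL; v3 keys are sent as the api_key query parameter."""
    query = urllib.parse.urlencode({"api_key": api_key, **params})
    return f"{TMDB_BASE}/{path.lstrip('/')}?{query}"


def key_works(api_key: str, timeout: float = 10.0) -> bool:
    """Return True if TMDB answers a simple request made with this key."""
    url = build_tmdb_url("movie/popular", api_key, page="1")
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            data = json.load(resp)
    except (OSError, ValueError):
        # HTTPError (e.g. 401 for a bad key), URLError and timeouts are OSErrors;
        # a body that is not JSON raises ValueError
        return False
    return "results" in data
```

For example, key_works("<your-tmdb-api-key>") should return True for an accepted key. The key ends up in the URL, so avoid logging these URLs.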

Next, stop and remove the running container, then delete the old image, which was built without an API key:

kato@docker-srv:~/Netflix-Local$ docker stop netflix

netflix

kato@docker-srv:~/Netflix-Local$ docker rm netflix

netflix

kato@docker-srv:~/Netflix-Local$ docker rmi 233443b928e1

Untagged: netflix:latest

Deleted: sha256:233443b928e1b47c5295bf3eba71c1f8d4608e446c64fd01d64b0e5439abebbd

Deleted: sha256:ce5531cc975623134e26413d2203457d2502b005c9f5da0af436ba36c3a0cb84

Deleted: sha256:50591ccab29cbfdd8892c7a6f053b050683e22316498f1721eef9af04b20900f

Deleted: sha256:b63b2bb7539ffc4b604298b65ac451994a9f51530efcdb44013b54127c1f4d55

Deleted: sha256:b44e8d779fbea899cd8a186a9ef912ddabe7abd74df13ea4c3479cb939224bb2

Deleted: sha256:57dbe92c465bf13a1f4b31ce9e8dea7a5d555830203575637c489d0f16565464

Deleted: sha256:2f054bd14003950d35f5f72bcc5ad4414f6fa233e8769010e5633b4b3332e2cc

kato@docker-srv:~/Netflix-Local$

Rebuild the image, passing your API key as a build argument, then run the new container:

kato@docker-srv:~/Netflix-Local$ docker build -t netflix:latest --build-arg TMDB_V3_API_KEY=<your-tmdb-api-key> .

DEPRECATED: The legacy builder is deprecated and will be removed in a future release.

Install the buildx component to build images with BuildKit:

https://docs.docker.com/go/buildx/

Sending build context to Docker daemon 16.26MB

Step 1/16 : FROM node:16.17.0-alpine as builder

---> 5dcd1f6157bd

Step 2/16 : WORKDIR /app

---> Using cache

---> 2c2db45329ad

Step 3/16 : COPY ./package.json .

---> Using cache

---> dc6d190fa818

Step 4/16 : COPY ./yarn.lock .

---> Using cache

---> 0edcb0863146

Step 5/16 : RUN yarn install

---> Using cache

---> 84ac66766224

Step 6/16 : COPY . .

---> Using cache

---> 158816abc572

Step 7/16 : ARG TMDB_V3_API_KEY

---> Using cache

---> 2af876c89c53

Step 8/16 : ENV VITE_APP_TMDB_V3_API_KEY=${TMDB_V3_API_KEY}

---> Running in 1e6251d28d1a

Removing intermediate container 1e6251d28d1a

---> 74f885ad0673

Step 9/16 : ENV VITE_APP_API_ENDPOINT_URL="https://api.themoviedb.org/3"

---> Running in 4679fa88116a

Removing intermediate container 4679fa88116a

---> dc82c5486a6a

Step 10/16 : RUN yarn build

---> Running in 63fd3d5257f7

yarn run v1.22.19

$ tsc && vite build

vite v3.2.2 building for production...

transforming...

✓ 1513 modules transformed.

rendering chunks...

dist/assets/ajax-loader.e7b44c86.gif 4.08 KiB

dist/assets/slick.12459f22.svg 2.10 KiB

dist/index.html 0.77 KiB

dist/assets/VideoItemWithHover.a2a1ef6e.js 1.34 KiB / gzip: 0.78 KiB

dist/assets/GenreExplore.de64d1ed.js 1.41 KiB / gzip: 0.82 KiB

dist/assets/WatchPage.fd1739d2.js 25.86 KiB / gzip: 9.00 KiB

dist/assets/HomePage.dc081027.js 69.76 KiB / gzip: 20.00 KiB

dist/assets/index.a62a091f.css 55.88 KiB / gzip: 15.19 KiB

dist/assets/index.e81cf057.js 1149.24 KiB / gzip: 356.33 KiB

(!) Some chunks are larger than 500 KiB after minification. Consider:

- Using dynamic import() to code-split the application

- Use build.rollupOptions.output.manualChunks to improve chunking: https://rollupjs.org/guide/en/#outputmanualchunks

- Adjust chunk size limit for this warning via build.chunkSizeWarningLimit.

Done in 28.49s.

Removing intermediate container 63fd3d5257f7

---> e5eae80c4c54

Step 11/16 : FROM nginx:stable-alpine

---> 2c3daafa6cc7

Step 12/16 : WORKDIR /usr/share/nginx/html

---> Running in 7988bb2076f7

Removing intermediate container 7988bb2076f7

---> 1762cd82b6e1

Step 13/16 : RUN rm -rf ./*

---> Running in 23828162c3e5

Removing intermediate container 23828162c3e5

---> 4ff5da2af0c1

Step 14/16 : COPY --from=builder /app/dist .

---> 0c839f669499

Step 15/16 : EXPOSE 80

---> Running in ad0982f67f83

Removing intermediate container ad0982f67f83

---> cd58185cd00f

Step 16/16 : ENTRYPOINT ["nginx", "-g", "daemon off;"]

---> Running in 206fc0cac339

Removing intermediate container 206fc0cac339

---> c94502f11135

Successfully built c94502f11135

Successfully tagged netflix:latest

kato@docker-srv:~/Netflix-Local$

kato@docker-srv:~/Netflix-Local$ docker run -d -p 8081:80 netflix

ad1ba742652241681ec27114e474f6771448454f7e834294833036be9947bbc8

The app is now fully deployed and fetches its data from TMDB using the API key generated earlier.

A second Ubuntu VM, jenkins-srv (8 GB RAM, 120 GB of disk space), will host:

Sonarqube

Trivy

Jenkins

Phase 2: Implementation of Security with SonarQube and Trivy

kato@jenkins-srv:~$ git clone https://github.com/Gatete-Bruno/Netflix-Local.git

Cloning into 'Netflix-Local'...

remote: Enumerating objects: 144, done.

remote: Counting objects: 100% (144/144), done.

remote: Compressing objects: 100% (120/120), done.

remote: Total 144 (delta 12), reused 139 (delta 10), pack-reused 0

Receiving objects: 100% (144/144), 7.60 MiB | 1.14 MiB/s, done.

Resolving deltas: 100% (12/12), done.

kato@jenkins-srv:~$ cd Netflix-Local/

kato@jenkins-srv:~/Netflix-Local$ ls

Dockerfile IaC Kubernetes README.md index.html package.json pipeline.txt public src tsconfig.json tsconfig.node.json vercel.json vite.config.ts yarn.lock

kato@jenkins-srv:~/Netflix-Local$ cd IaC/

kato@jenkins-srv:~/Netflix-Local/IaC$ ls

Bash-Scripts K8s-Kubespray

kato@jenkins-srv:~/Netflix-Local/IaC$ cd Bash-Scripts/

kato@jenkins-srv:~/Netflix-Local/IaC/Bash-Scripts$ ls

docker.sh grafana.sh jenkins.sh node_exporter.sh prometheus.sh sonarqube.sh trivy.sh

kato@jenkins-srv:~/Netflix-Local/IaC/Bash-Scripts$ chmod +x docker.sh

kato@jenkins-srv:~/Netflix-Local/IaC/Bash-Scripts$ ./docker.sh

kato@jenkins-srv:~/Netflix-Local/IaC/Bash-Scripts$ systemctl status docker.service

● docker.service - Docker Application Container Engine

Loaded: loaded (/lib/systemd/system/docker.service; enabled; vendor preset: enabled)

Active: active (running) since Thu 2024-02-29 05:48:07 UTC; 1min 30s ago

TriggeredBy: ● docker.socket

Docs: https://docs.docker.com

Main PID: 5393 (dockerd)

Tasks: 10

Memory: 27.9M

CGroup: /system.slice/docker.service

└─5393 /usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock

Feb 29 05:48:06 jenkins-srv systemd[1]: Starting Docker Application Container Engine...

Feb 29 05:48:06 jenkins-srv dockerd[5393]: time="2024-02-29T05:48:06.956867965Z" level=info msg="Starting up"

Feb 29 05:48:06 jenkins-srv dockerd[5393]: time="2024-02-29T05:48:06.959643512Z" level=info msg="detected 127.0.0.53 nameserver, assuming systemd-resolved, so using resolv.conf: /run/systemd/resolve/reso>

Feb 29 05:48:07 jenkins-srv dockerd[5393]: time="2024-02-29T05:48:07.033841620Z" level=info msg="Loading containers: start."

Feb 29 05:48:07 jenkins-srv dockerd[5393]: time="2024-02-29T05:48:07.253211810Z" level=info msg="Loading containers: done."

Feb 29 05:48:07 jenkins-srv dockerd[5393]: time="2024-02-29T05:48:07.275265688Z" level=warning msg="WARNING: No swap limit support"

Feb 29 05:48:07 jenkins-srv dockerd[5393]: time="2024-02-29T05:48:07.275316566Z" level=info msg="Docker daemon" commit="24.0.5-0ubuntu1~20.04.1" graphdriver=overlay2 version=24.0.5

Feb 29 05:48:07 jenkins-srv dockerd[5393]: time="2024-02-29T05:48:07.275409690Z" level=info msg="Daemon has completed initialization"

Feb 29 05:48:07 jenkins-srv dockerd[5393]: time="2024-02-29T05:48:07.306866708Z" level=info msg="API listen on /run/docker.sock"

Feb 29 05:48:07 jenkins-srv systemd[1]: Started Docker Application Container Engine.


SonarQube is a powerful "code quality detective" tool designed for software developers. It functions as a static code analysis platform, scanning your codebase to identify and report various issues such as bugs, security vulnerabilities, code smells, and other potential pitfalls. By leveraging SonarQube, developers gain valuable insights and recommendations to enhance the overall quality and maintainability of their software projects.

Key Features:

Static Code Analysis: Detects code issues without executing the code.

Security Vulnerability Scanning: Identifies potential security risks within the code.

Code Smell Detection: Flags areas of code that may require refactoring.

Quality Metrics: Provides metrics and insights for code quality improvement.

Integration with CI/CD: Seamlessly integrates with Continuous Integration and Continuous Deployment pipelines.

In this setup, SonarQube runs as a Docker container on the Jenkins VM. It uses the lts-community image and publishes its web UI on port 9000:

docker run -d --name sonar -p 9000:9000 sonarqube:lts-community

kato@jenkins-srv:~/Netflix-Local/IaC/Bash-Scripts$ docker run -d --name sonar -p 9000:9000 sonarqube:lts-community

Unable to find image 'sonarqube:lts-community' locally

lts-community: Pulling from library/sonarqube

d66d6a6a3687: Pull complete

18f947fdc0fc: Pull complete

5374706a264d: Pull complete

c9aeff6b625d: Pull complete

82faf7b7220d: Pull complete

86c5dcbfeabd: Pull complete

3d8c05302d61: Pull complete

4f4fb700ef54: Pull complete

Digest: sha256:f5f3d1d45484581d381b63f7dae56a6887a24cb4b068b8da561c84703833013c

Status: Downloaded newer image for sonarqube:lts-community

846fc66db6074f9da428a24ab6e650f6302835a15158e4867344712055d85b7e

kato@jenkins-srv:~/Netflix-Local/IaC/Bash-Scripts$

Accessing SonarQube

Open your web browser and navigate to http://192.168.1.55:9000.

Log in with the following credentials:

Username: admin

Password: admin

Upon logging in, update your password to enhance security.

Trivy acts as a vigilant "security guard" for your software, specializing in scrutinizing components, particularly libraries, that your application relies on. This tool plays a crucial role in identifying and mitigating potential security vulnerabilities, ensuring the safety and resilience of your software against cyber threats and attacks.

Key Features:

Vulnerability Scanning: Detects and reports known security issues in software components.

Library Inspection: Focuses on scrutinizing libraries and dependencies.

Threat Mitigation: Provides guidance on addressing identified security vulnerabilities.

Container Security: Especially useful for scanning vulnerabilities in containerized environments.

kato@jenkins-srv:~/Netflix-Local/IaC/Bash-Scripts$ ls -la

total 36

drwxrwxr-x 2 kato kato 4096 Feb 29 05:46 .

drwxrwxr-x 4 kato kato 4096 Feb 29 05:46 ..

-rwxrwxr-x 1 kato kato 164 Feb 29 05:46 docker.sh

-rw-rw-r-- 1 kato kato 703 Feb 29 05:46 grafana.sh

-rw-rw-r-- 1 kato kato 866 Feb 29 05:46 jenkins.sh

-rw-rw-r-- 1 kato kato 1101 Feb 29 05:46 node_exporter.sh

-rw-rw-r-- 1 kato kato 1655 Feb 29 05:46 prometheus.sh

-rw-rw-r-- 1 kato kato 2394 Feb 29 05:46 sonarqube.sh

-rw-rw-r-- 1 kato kato 537 Feb 29 05:46 trivy.sh

kato@jenkins-srv:~/Netflix-Local/IaC/Bash-Scripts$ chmod +x trivy.sh

kato@jenkins-srv:~/Netflix-Local/IaC/Bash-Scripts$ ./trivy.sh

For reference, trivy.sh runs the following:

#!/bin/bash

# Step a: Install required packages

sudo apt-get install -y wget apt-transport-https gnupg lsb-release

# Step b: Add the Trivy repository key

wget -qO - https://aquasecurity.github.io/trivy-repo/deb/public.key | sudo apt-key add -

# Step c: Add the Trivy repository to sources list

echo "deb https://aquasecurity.github.io/trivy-repo/deb $(lsb_release -sc) main" | sudo tee -a /etc/apt/sources.list.d/trivy.list

# Step d: Update the package list

sudo apt-get update

# Step e: Install Trivy

sudo apt-get install -y trivy

kato@jenkins-srv:~/Netflix-Local$ docker images ls

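REPOSITORY TAG IMAGE ID CREATED SIZE

The list is empty because docker images ls treats ls as a repository name to filter by. Run docker image ls (or plain docker images) to list every local image.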

kato@jenkins-srv:~/Netflix-Local$ docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

8fd504be93b8 netflix "nginx -g 'daemon of…" 4 minutes ago Up 4 minutes 0.0.0.0:8081->80/tcp, :::8081->80/tcp agitated_banzai

846fc66db607 sonarqube:lts-community "/opt/sonarqube/dock…" 22 minutes ago Up 22 minutes 0.0.0.0:9000->9000/tcp, :::9000->9000/tcp sonar

kato@jenkins-srv:~/Netflix-Local$ sudo trivy image netflix:latest
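The default table output is easy to read, but hard to act on in a script. Trivy can also write a JSON report, for example with trivy image --format json -o trivy.json netflix:latest. The short Python sketch below counts the findings in such a report by severity. It assumes Trivy's JSON layout: a top-level Results list whose entries may carry a Vulnerabilities list with a Severity field. The helper names are hypothetical.

```python
import json
from collections import Counter

# Severity levels Trivy assigns, most severe first
SEVERITIES = ("CRITICAL", "HIGH", "MEDIUM", "LOW", "UNKNOWN")


def count_by_severity(report: dict) -> dict:
    """Count findings per severity in a parsed Trivy JSON report."""
    counts = Counter()
    for result in report.get("Results") or []:
        # A target without findings may omit Vulnerabilities or set it to null
        for vuln in result.get("Vulnerabilities") or []:
            counts[vuln.get("Severity", "UNKNOWN")] += 1
    return {sev: counts[sev] for sev in SEVERITIES}


def load_report(path: str) -> dict:
    """Read a report written with: trivy image --format json -o <file> <image>"""
    with open(path, encoding="utf-8") as fh:
        return json.load(fh)


def blocking(counts: dict, levels=("CRITICAL", "HIGH")) -> bool:
    """True if any finding is at one of the given severity levels."""
    return any(counts.get(level, 0) > 0 for level in levels)
```

For example, blocking(count_by_severity(load_report("trivy.json"))) could decide whether a build should stop. Which severities count as blocking is a policy choice; the default here is only an example.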

Automating Deployment with Jenkins Pipeline

In this phase, we automate the deployment with a Jenkins Pipeline. A pipeline defines the whole software delivery process as code, from building the code to testing and deployment. Automating these steps makes every build repeatable and consistent, and it gives team members a shared, visible process.

Key Steps in Jenkins Pipeline:

Version Control Integration: Connect Jenkins to your version control system (e.g., Git) to retrieve the latest code.

Build Stage: Compile and build your application, ensuring that it is ready for deployment.

Test Stage: Execute tests to validate the functionality and integrity of your code.

Security Scanning: Integrate tools like Trivy and SonarQube to scan for security vulnerabilities and code quality issues.

Artifact Generation: Create deployable artifacts, such as Docker images, that encapsulate your application.

Deployment Stage: Deploy the artifacts to the target environment, whether it's a local server or a Kubernetes cluster.

Post-Deployment Checks: Perform additional checks or tests after deployment to ensure everything is functioning as expected.

Notification: Notify relevant stakeholders about the deployment status or any issues.
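A post-deployment check can be as simple as polling the app's URL after the container starts. The following is a minimal Python sketch. It assumes that the app is published on port 8081 of the Jenkins host, as in the pipeline's deploy stage. The function name and the retry values are illustrative.

```python
import time
import urllib.request


def wait_for_http(url: str, attempts: int = 10, delay: float = 3.0,
                  timeout: float = 5.0) -> bool:
    """Poll url until it returns HTTP 200; give up after `attempts` tries."""
    for attempt in range(attempts):
        try:
            with urllib.request.urlopen(url, timeout=timeout) as resp:
                if resp.status == 200:
                    return True
        except OSError:
            # Connection refused, timeouts and HTTP errors all derive from OSError
            pass
        if attempt < attempts - 1:
            time.sleep(delay)  # give the container time to start
    return False
```

For example, wait_for_http("http://192.168.1.55:8081") returns True once Nginx serves the app. A False result is a natural trigger for the Notification step.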

Bash script for installing Jenkins

#!/bin/bash

sudo apt update -y

sudo apt install default-jdk -y

sudo mkdir -p /etc/apt/keyrings

wget -O - https://packages.adoptium.net/artifactory/api/gpg/key/public | sudo tee /etc/apt/keyrings/adoptium.asc

echo "deb [signed-by=/etc/apt/keyrings/adoptium.asc] https://packages.adoptium.net/artifactory/deb $(awk -F= '/^VERSION_CODENAME/{print$2}' /etc/os-release) main" | sudo tee /etc/apt/sources.list.d/adoptium.list

sudo apt update -y

sudo apt install temurin-17-jdk -y

/usr/bin/java --version

curl -fsSL https://pkg.jenkins.io/debian-stable/jenkins.io-2023.key | sudo tee /usr/share/keyrings/jenkins-keyring.asc > /dev/null

echo deb [signed-by=/usr/share/keyrings/jenkins-keyring.asc] https://pkg.jenkins.io/debian-stable binary/ | sudo tee /etc/apt/sources.list.d/jenkins.list > /dev/null

sudo apt-get update -y

sudo apt-get install jenkins -y

sudo systemctl start jenkins

sudo systemctl status jenkins

Jenkins Pipeline: Essential Plugins Installation

Prerequisites:

Before setting up the Jenkins Pipeline, ensure that the following plugins are installed to enhance the functionality and address specific needs of the pipeline:

Eclipse Temurin Installer: Simplifies the installation of Java runtimes, making it easier to manage Java versions.

SonarQube Scanner: Integrates SonarQube analysis into your Jenkins builds, providing insights into code quality and security.

NodeJS Plugin: Enables the use of Node.js in Jenkins jobs, allowing for the execution of JavaScript-based build steps.

Email Extension Plugin: Extends email notification functionality, providing more options for sending notifications in Jenkins.

OWASP Dependency-Check Plugin: Runs OWASP Dependency-Check, which scans project dependencies for publicly disclosed vulnerabilities (known CVEs).

Prometheus Metrics: Collects and exports metrics for Jenkins, facilitating monitoring through Grafana dashboards.

Docker-related Plugins: Install the Docker plugins, such as Docker and Docker Pipeline (which provides the withDockerRegistry step used in the pipeline), to support building, pushing, and deploying Docker images.

Installation Instructions:

Open your Jenkins instance.

Navigate to the Manage Jenkins page.

Click on Manage Plugins.

Switch to the Available tab.

Search for each plugin by name in the Filter box.

Select the checkbox next to each plugin you want to install.

Click on the Install without restart button to ensure a smooth installation process.

Once installed, verify that the plugins appear in the Installed tab.

Step 2: Add SonarQube and Docker Credentials

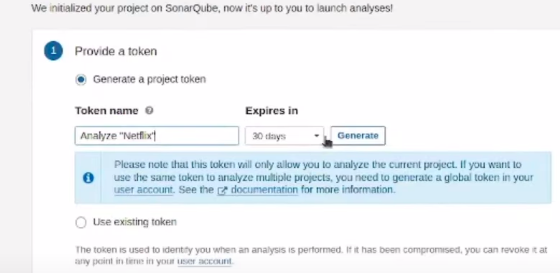

First, generate a SonarQube token to store in Jenkins as a Secret text credential.

SonarQube Credentials Setup:

Open your SonarQube instance at http://192.168.1.55:9000.

Log in with your SonarQube username and password.

Navigate to Administration -> Security -> Users -> Tokens -> Generate Token.

Copy the generated token.

Jenkins SonarQube Credential Configuration:

Open Jenkins and go to Manage Jenkins -> Credentials -> System -> Global credentials (unrestricted) -> Add Credentials.

Choose the Kind as Secret text from the dropdown.

In the Secret field, paste the SonarQube token you copied.

Set the ID to "sonar-token" for easy reference.

Click on OK to create the credentials.

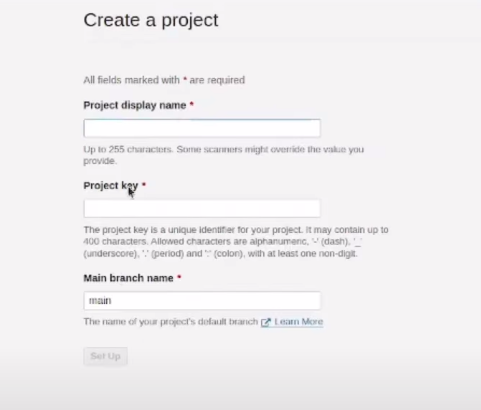
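Before relying on the token in Jenkins, you can confirm that SonarQube accepts it. The following Python sketch calls SonarQube's Web API endpoint api/authentication/validate, which answers with a JSON object containing a valid field. It assumes token authentication over HTTP Basic: the token is the username and the password is empty. Check the Web API documentation of your SonarQube version. The helper names are hypothetical.

```python
import base64
import json
import urllib.request

SONAR_URL = "http://192.168.1.55:9000"  # SonarQube address used in this setup


def basic_auth_header(token: str) -> str:
    """Encode a user token as the Basic-auth login with an empty password."""
    raw = f"{token}:".encode("utf-8")
    return "Basic " + base64.b64encode(raw).decode("ascii")


def token_is_valid(token: str, base_url: str = SONAR_URL, timeout: float = 10.0) -> bool:
    """Call api/authentication/validate and read its "valid" field."""
    req = urllib.request.Request(
        f"{base_url}/api/authentication/validate",
        headers={"Authorization": basic_auth_header(token)},
    )
    try:
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            return bool(json.load(resp).get("valid"))
    except (OSError, ValueError):
        # Unreachable server, HTTP errors, or a non-JSON answer
        return False
```

For example, token_is_valid("<your-sonarqube-token>") should return True before you paste the token into the Secret field.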

Setting Up SonarQube Projects for Jenkins:

Visit your SonarQube server.

Click on Projects.

Click on Set Up to configure the project.

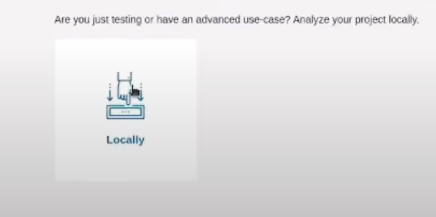

To integrate SonarQube with Jenkins, you need to generate the necessary configuration commands based on your operating system. Follow these steps:

Visit your SonarQube server.

Click on Projects.

In the Name field, type "Netflix".

Click on Generate to get the configuration commands.

Select your operating system (OS). SonarQube then shows a scanner command like the following, with your own token in place of the placeholder:

sonar-scanner \
-Dsonar.projectKey=Netflix \
-Dsonar.sources=. \
-Dsonar.host.url=http://192.168.1.55:9000 \
-Dsonar.login=<your-sonarqube-token>

Set up Docker credentials

To integrate DockerHub with Jenkins, follow these steps:

Open Jenkins.

Navigate to Manage Jenkins.

Click on Credentials.

Choose (global).

Click on Add Credentials.

Fill in the following details:

Username: Your DockerHub username

Password: Your DockerHub password (a Docker Hub access token also works here and is the safer choice)

ID: docker

Click on OK or Apply to save the credentials.

Step 3: Jenkins Tools Setup

Open Jenkins.

Navigate to Manage Jenkins.

JDK Configuration

Click on Global Tool Configuration.

Under JDK, click on Add JDK.

Choose Adoptium.net installer.

Select version 17.0.8.1+1 and name it jdk17, the name that the pipeline's tools block refers to.

Node.js Configuration

Under Global Tool Configuration, click on Add NodeJS.

Enter name as node16 and choose version 16.2.0.

Docker Configuration

Still in Global Tool Configuration, click on Add Docker.

Name it as docker and choose to download from docker.com.

SonarQube Scanner Configuration

Continue in Global Tool Configuration, click on SonarQube Scanner.

Name it as sonar-scanner.

OWASP Dependency Check Configuration

In the same Global Tool Configuration, click on Dependency-Check.

Name it as DP-Check and select to install from github.com.

Step 4: Configure Global Settings for SonarQube

Go to Manage Jenkins.

Click on Configure System.

Scroll down to SonarQube servers and add a new server.

Name: sonar-server

Server URL: http://192.168.1.55:9000

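Server authentication token: sonar-token

The pipeline's quality gate stage (waitForQualityGate) relies on SonarQube calling Jenkins back. For this, also create a webhook in SonarQube under Administration -> Configuration -> Webhooks with the URL http://192.168.1.55:8080/sonarqube-webhook/ (assuming Jenkins listens on port 8080 of the same VM).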

Remember to log in to Docker on your Jenkins server.

kato@jenkins-srv:~/Netflix-Local/IaC/Bash-Scripts$ docker login

Login with your Docker ID to push and pull images from Docker Hub. If you don't have a Docker ID, head over to https://hub.docker.com to create one.

Username:

Password:

WARNING! Your password will be stored unencrypted in /home/kato/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

kato@jenkins-srv:~/Netflix-Local/IaC/Bash-Scripts$

Remember to do this

1. Add Jenkins User to Docker Group:

Ensure that the Jenkins user is added to the docker group so that it has the necessary permissions to access the Docker daemon socket.

sudo usermod -aG docker jenkins

After running this command, restart the Jenkins service:

sudo systemctl restart jenkins

Step 5: Running the Pipeline

In Jenkins, go to New Item.

Select Pipeline and name it as netflix.

Scroll down to the Pipeline section.

Choose Pipeline script from SCM.

Select Git for SCM.

Enter the repository URL where your Jenkinsfile is located.

Save the pipeline configuration.

Now, click on Build Now to run the pipeline.

Alternatively, choose Pipeline script instead of Pipeline script from SCM, and paste the following code into the Script box:

pipeline {

agent any

tools {

jdk 'jdk17'

nodejs 'node16'

}

environment {

SCANNER_HOME = tool 'sonar-scanner'

}

stages {

stage('clean workspace') {

steps {

cleanWs()

}

}

stage('Checkout from Git') {

steps {

git branch: 'main', url: 'https://github.com/Gatete-Bruno/DevSecOps-Project.git'

}

}

stage("Sonarqube Analysis ") {

steps {

withSonarQubeEnv('sonar-server') {

sh ''' $SCANNER_HOME/bin/sonar-scanner -Dsonar.projectName=Netflix \

-Dsonar.projectKey=Netflix '''

}

}

}

stage("quality gate") {

steps {

script {

waitForQualityGate abortPipeline: false, credentialsId: 'sonar-token'

}

}

}

stage('Install Dependencies') {

steps {

sh "npm install"

}

}

stage('OWASP FS SCAN') {

steps {

dependencyCheck additionalArguments: '--scan ./ --disableYarnAudit --disableNodeAudit', odcInstallation: 'DP-Check'

dependencyCheckPublisher pattern: '**/dependency-check-report.xml'

}

}

stage('TRIVY FS SCAN') {

steps {

sh "trivy fs . > trivyfs.txt"

}

}

stage("Docker Build & Push") {

steps {

script {

withDockerRegistry(credentialsId: 'docker', toolName: 'docker') {

sh """

docker build --build-arg TMDB_V3_API_KEY=<your-tmdb-api-key> -t bruno74t/netflix2:latest .

docker push bruno74t/netflix2:latest

"""

}

}

}

}

stage("TRIVY") {

steps {

sh "trivy image bruno74t/netflix2:latest > trivyimage.txt"

}

}

stage('Deploy to container') {

steps {

sh 'docker stop netflix || true' // Stop the container if running (ignore errors if it doesn't exist)

sh 'docker rm netflix || true' // Remove the container if exists (ignore errors if it doesn't exist)

sh 'docker run -d --name netflix -p 8081:80 bruno74t/netflix2:latest'

}

}

}

}

This Jenkins Pipeline automates building, scanning, and deploying the Netflix application locally, using Docker and Jenkins as the CI/CD tool. The project as a whole is divided into the following phases, each focusing on a different aspect of the deployment process.

Phases

Phase 1: Deploy Netflix Locally on Ubuntu VM

Install Docker on the Ubuntu VM using a Bash script.

Set up Docker and build Netflix images.

Deploy Netflix locally on the Ubuntu VM.

Phase 2: Implementation of Security with SonarQube and Trivy

Set up a new Ubuntu VM with SonarQube, Trivy, and Jenkins.

Implement security measures using SonarQube and Trivy.

Phase 3: Automation with Jenkins Pipeline

Install suggested plugins for the Jenkins pipeline.

Set up SonarQube credentials and projects.

Configure DockerHub credentials in Jenkins.

Configure tools in Jenkins (JDK, Node.js, Docker, SonarQube Scanner, and OWASP Dependency Check).

Configure global settings for SonarQube in Jenkins.

Run the Jenkins Pipeline for Netflix application deployment.

Running the Pipeline

Navigate to Jenkins and create a new item named netflix with the pipeline script from SCM.

Provide the repository URL where the Jenkinsfile is located.

Save the pipeline configuration.

Click on Build Now to trigger the pipeline.

Pipeline Flow

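The pipeline checks out the code, runs the SonarQube analysis and quality gate, installs dependencies, and scans the file system with OWASP Dependency-Check and Trivy. It then builds the Docker image, pushes it to DockerHub, scans the pushed image with Trivy, and finally runs it as a container on port 8081.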

Monitoring Setup with Prometheus and Grafana

In this phase, we will set up monitoring for the Netflix application using Prometheus and Grafana. Prometheus acts as a data collector, continuously gathering metrics about the application's performance. Grafana provides a visual representation of these metrics through charts and graphs, making it easier to monitor and manage the application.

Prerequisites

A new Ubuntu VM for monitoring with the following specifications:

2 CPU cores

4 GB of memory

20 GB of free disk space

Step 1: Set Up the Ubuntu VM for Monitoring

After Prometheus is installed with the script below, visit http://192.168.1.50:9090 to access the Prometheus web UI.

Step 2: Installing Node Exporter

Node Exporter is a reporter tool for Prometheus, collecting information about the computer (node) to monitor its performance. It gathers data on CPU usage, memory, disk space, and network activity.

Network metrics

Node Exporter also reports network statistics, such as per-interface traffic and socket counts. These are useful for monitoring network activity and keeping the application running smoothly and securely. Node Exporter serves all of its metrics on port 9100.

Bash Script for Installation

#!/bin/bash

# Step 1: Create a system user for Node Exporter and download Node Exporter

sudo useradd --system --no-create-home --shell /bin/false node_exporter

wget https://github.com/prometheus/node_exporter/releases/download/v1.6.1/node_exporter-1.6.1.linux-amd64.tar.gz

# Step 2: Extract Node Exporter files, move the binary, and clean up

tar -xvf node_exporter-1.6.1.linux-amd64.tar.gz

sudo mv node_exporter-1.6.1.linux-amd64/node_exporter /usr/local/bin/

rm -rf node_exporter*

# Step 3: Create a systemd unit configuration file for Node Exporter

sudo tee /etc/systemd/system/node_exporter.service > /dev/null <<EOL

[Unit]

Description=Node Exporter

Wants=network-online.target

After=network-online.target

StartLimitIntervalSec=500

StartLimitBurst=5

[Service]

User=node_exporter

Group=node_exporter

Type=simple

Restart=on-failure

RestartSec=5s

ExecStart=/usr/local/bin/node_exporter --collector.logind

[Install]

WantedBy=multi-user.target

EOL

# Step 4: Reload systemd so it sees the new unit, then enable and start Node Exporter

sudo systemctl daemon-reload

sudo systemctl enable node_exporter

sudo systemctl start node_exporter

sudo systemctl status node_exporter

You can access Node Exporter metrics in Prometheus.
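Before wiring Node Exporter into Prometheus, you can look at the raw metrics it serves at http://localhost:9100/metrics (its default port and the target used in prometheus.yml). The following Python sketch fetches that page and turns its text exposition lines into a dictionary. The parser is deliberately simplified: it skips # HELP and # TYPE comments and ignores optional timestamps. It is an illustration, not a full implementation of the exposition format.

```python
import urllib.request


def parse_metrics(text: str, prefix: str = "") -> dict:
    """Map each series (name plus labels) to its value, keeping names with prefix."""
    samples = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and # HELP / # TYPE comments
        if "{" in line:
            end = line.rfind("}") + 1  # label values may contain spaces
            series, rest = line[:end], line[end:]
        else:
            parts = line.split(None, 1)
            if len(parts) != 2:
                continue
            series, rest = parts
        fields = rest.split()
        if not fields or not series.startswith(prefix):
            continue
        samples[series] = float(fields[0])  # an optional timestamp is ignored
    return samples


def fetch_metrics(url: str = "http://localhost:9100/metrics", timeout: float = 5.0) -> str:
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8")
```

For example, parse_metrics(fetch_metrics(), prefix="node_load") returns the load averages that Node Exporter reports.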

Configure Prometheus Plugin Integration:

Bash Script for installing Prometheus

#!/bin/bash

# 1. Create dedicated Linux user for Prometheus and download Prometheus

sudo useradd --system --no-create-home --shell /bin/false prometheus

wget https://github.com/prometheus/prometheus/releases/download/v2.47.1/prometheus-2.47.1.linux-amd64.tar.gz

# 2. Extract Prometheus files, move them, and create directories

tar -xvf prometheus-2.47.1.linux-amd64.tar.gz

cd prometheus-2.47.1.linux-amd64/

sudo mkdir -p /data /etc/prometheus

sudo mv prometheus promtool /usr/local/bin/

sudo mv consoles/ console_libraries/ /etc/prometheus/

sudo mv prometheus.yml /etc/prometheus/prometheus.yml

# 3. Set ownership for directories

sudo chown -R prometheus:prometheus /etc/prometheus/ /data/

# 4. Create a systemd unit configuration file for Prometheus

sudo tee /etc/systemd/system/prometheus.service > /dev/null <<EOL

[Unit]

Description=Prometheus

Wants=network-online.target

After=network-online.target

StartLimitIntervalSec=500

StartLimitBurst=5

[Service]

User=prometheus

Group=prometheus

Type=simple

Restart=on-failure

RestartSec=5s

ExecStart=/usr/local/bin/prometheus \
  --config.file=/etc/prometheus/prometheus.yml \
  --storage.tsdb.path=/data \
  --web.console.templates=/etc/prometheus/consoles \
  --web.console.libraries=/etc/prometheus/console_libraries \
  --web.listen-address=0.0.0.0:9090 \
  --web.enable-lifecycle

[Install]

WantedBy=multi-user.target

EOL

# 5. Reload systemd, then enable and start the Prometheus service

sudo systemctl daemon-reload

sudo systemctl enable prometheus

sudo systemctl start prometheus

# 6. Check Prometheus status

sudo systemctl status prometheus

echo "Prometheus installation, configuration, and start completed."

Next, edit the prometheus.yml file to add the targets you want to monitor:

sudo vi /etc/prometheus/prometheus.yml

global:
  scrape_interval: 15s

scrape_configs:
  - job_name: 'node_exporter'
    static_configs:
      - targets: ['localhost:9100']

  - job_name: 'jenkins'
    metrics_path: '/prometheus'
    static_configs:
      - targets: ['192.168.1.55:8080']

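Add these jobs under the existing scrape_configs section with proper indentation, because YAML is indentation-sensitive. The default prometheus.yml already contains a prometheus job that scrapes localhost:9090. The jenkins job assumes Jenkins is reachable at 192.168.1.55:8080 and that the Prometheus metrics plugin serves metrics at /prometheus.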
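If you prefer not to edit the file in vi, you can write the configuration non-interactively. The sketch below uses a hypothetical helper, prometheus_config, that prints a complete prometheus.yml. It makes three assumptions:

* You are happy to replace the whole default file.
* The default prometheus self-scrape job should stay, so three targets still appear.
* Jenkins is at this post's address, 192.168.1.55:8080.

```bash
#!/bin/bash
# prometheus_config: print a complete prometheus.yml to stdout.
# The quoted 'EOF' prevents the shell from expanding anything in the YAML.
prometheus_config() {
  cat <<'EOF'
global:
  scrape_interval: 15s

scrape_configs:
  - job_name: 'prometheus'
    static_configs:
      - targets: ['localhost:9090']

  - job_name: 'node_exporter'
    static_configs:
      - targets: ['localhost:9100']

  - job_name: 'jenkins'
    metrics_path: '/prometheus'
    static_configs:
      - targets: ['192.168.1.55:8080']
EOF
}
```

For example, run `prometheus_config | sudo tee /etc/prometheus/prometheus.yml > /dev/null`, then validate the file as described in the next step.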

a. Check the validity of the configuration file

promtool check config /etc/prometheus/prometheus.yml

b. Reload the Prometheus configuration without restarting

curl -X POST http://localhost:9090/-/reload
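You can combine steps a and b so that Prometheus only reloads a file that has passed validation. This sketch uses a hypothetical function name, reload_if_valid. It relies on the --web.enable-lifecycle flag set in the unit file above, because /-/reload is disabled without that flag.

```bash
#!/bin/bash
# reload_if_valid [CONFIG] [BASE_URL]
# Run promtool against CONFIG and trigger a reload only if it passes.
reload_if_valid() {
  local config="${1:-/etc/prometheus/prometheus.yml}"
  local base_url="${2:-http://localhost:9090}"
  if ! promtool check config "$config"; then
    echo "config invalid, not reloading" >&2
    return 1
  fi
  # -f makes curl exit non-zero if Prometheus answers with an HTTP error
  curl -fsS -X POST "$base_url/-/reload"
}
```

Run reload_if_valid after each edit. With no arguments it uses /etc/prometheus/prometheus.yml and http://localhost:9090.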

Open the Prometheus web UI again (http://<your-server-ip>:9090), click Status and select Targets. You should see three targets: the default prometheus job plus the node_exporter and jenkins jobs added to prometheus.yml.
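You can also script this check against Prometheus's HTTP API (GET /api/v1/targets). The helper below, count_up_targets (a hypothetical name), counts targets whose health is "up". It assumes the compact JSON that Prometheus returns and is deliberately crude, so prefer jq if it is installed.

```bash
#!/bin/bash
# count_up_targets: read /api/v1/targets JSON on stdin and print how many
# targets report "health":"up". gsub() returns the number of matches per line.
count_up_targets() {
  awk '{ n += gsub(/"health":"up"/, "") } END { print n + 0 }'
}
```

For example, `curl -s http://localhost:9090/api/v1/targets | count_up_targets` should print 3 once all three jobs are healthy.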

prometheus targets dashboard

Set Up Grafana

Bash Script to install Grafana

#!/bin/bash

# Update package list

sudo apt-get update

# Install required packages

sudo apt-get install -y apt-transport-https software-properties-common

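# Add the GPG key for Grafana (apt-key is deprecated, so store the key in a keyring file)

sudo mkdir -p /etc/apt/keyrings/

wget -q -O - https://apt.grafana.com/gpg.key | gpg --dearmor | sudo tee /etc/apt/keyrings/grafana.gpg > /dev/null

# Add the repository for Grafana stable releases

echo "deb [signed-by=/etc/apt/keyrings/grafana.gpg] https://apt.grafana.com stable main" | sudo tee -a /etc/apt/sources.list.d/grafana.list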

# Update package list again

sudo apt-get update

# Install Grafana

sudo apt-get -y install grafana

# Enable and start Grafana service

sudo systemctl enable grafana-server

sudo systemctl start grafana-server

# Check the status of Grafana service

sudo systemctl status grafana-server
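`systemctl status` is meant for humans and may open a pager. For a quick scripted check of the whole monitoring stack, you could use a sketch like the following. check_services is a hypothetical helper, and it relies on `systemctl is-active --quiet`, which only sets an exit code.

```bash
#!/bin/bash
# check_services UNIT...: print whether each systemd unit is active and
# return non-zero if any of them is not.
check_services() {
  local svc failed=0
  for svc in "$@"; do
    if systemctl is-active --quiet "$svc"; then
      echo "$svc: active"
    else
      echo "$svc: NOT active"
      failed=1
    fi
  done
  return "$failed"
}
```

For example, run `check_services node_exporter prometheus grafana-server` to check the three units set up in this post.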

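Access Grafana on the same IP address on port 3000 (http://<your-server-ip>:3000).

Log in with the default credentials (username: admin, password: admin). Grafana then asks you to set a new password.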

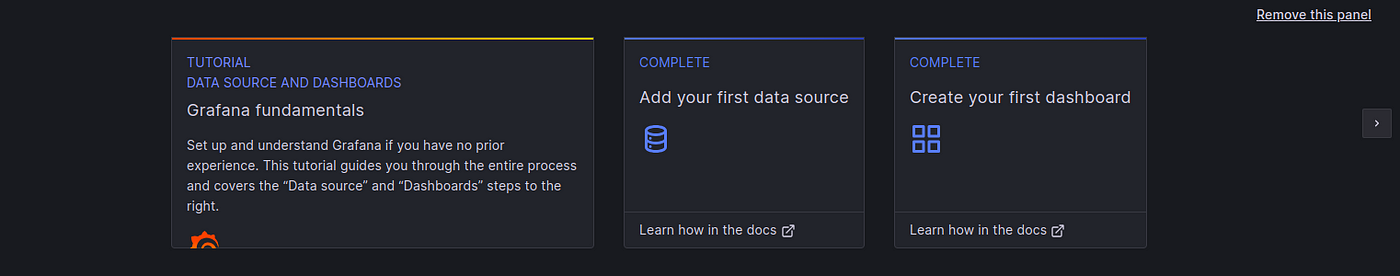

Add Prometheus Data Source:

To visualize metrics, you need to add a data source.

Follow these steps:

- Click on the gear icon (⚙️) in the left sidebar to open the “Configuration” menu.

- Select “Data Sources.”

- Click on the “Add data source” button.

- Choose “Prometheus” as the data source type.

- In the “HTTP” section:

  - Set the “URL” to http://localhost:9090 (assuming Prometheus is running on the same server).

- Click the “Save & Test” button to ensure the data source is working.
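You can also create the same data source through Grafana's HTTP API (POST /api/datasources), which is handy when rebuilding the VM. This is a minimal sketch with these assumptions:

* The function name is hypothetical.
* The data source name “Prometheus” is an assumption.
* Credentials default to admin:admin unless you set GRAFANA_AUTH. Set it if you changed the password at first login.

```bash
#!/bin/bash
# add_prometheus_datasource [GRAFANA_URL] [PROMETHEUS_URL]
# Create a Prometheus data source via Grafana's HTTP API.
add_prometheus_datasource() {
  local grafana="${1:-http://localhost:3000}"
  local prom_url="${2:-http://localhost:9090}"
  # "access":"proxy" means the Grafana server (not the browser) queries Prometheus
  curl -fsS -u "${GRAFANA_AUTH:-admin:admin}" \
    -H 'Content-Type: application/json' \
    -X POST "$grafana/api/datasources" \
    -d "{\"name\":\"Prometheus\",\"type\":\"prometheus\",\"url\":\"$prom_url\",\"access\":\"proxy\"}"
}
```

For example, run `GRAFANA_AUTH=admin:<new-password> add_prometheus_datasource` on the Grafana VM.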

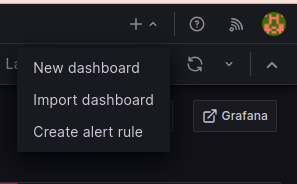

Import a Dashboard

Importing a dashboard in Grafana is like using a ready-made template to quickly create a set of charts and graphs for monitoring your data, without having to build them from scratch.

- Click on the “+” (plus) icon in the left sidebar to open the “Create” menu.

- Select “Dashboard.”

- Click on the “Import” dashboard option.

- Enter the ID of the dashboard you want to import (for example, 1860, the community “Node Exporter Full” dashboard).

- Click the “Load” button.

- Select the data source you added (Prometheus) from the dropdown.

- Click the “Import” button.

Configure the Global Prometheus Setting in Jenkins

Go to Manage Jenkins → System, search for “Prometheus”, review the Prometheus plugin settings, then click Apply and Save.
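Before importing the Jenkins dashboard, it can help to confirm that Jenkins actually serves metrics at the path Prometheus scrapes. The sketch below uses a hypothetical helper, check_jenkins_metrics, which fetches http://192.168.1.55:8080/prometheus/ (this post's Jenkins address plus the metrics_path from prometheus.yml). It then looks for "# TYPE" lines, which the Prometheus text format uses to declare each metric family.

```bash
#!/bin/bash
# check_jenkins_metrics [URL]: verify that URL returns Prometheus text-format metrics.
check_jenkins_metrics() {
  local url="${1:-http://192.168.1.55:8080/prometheus/}" body count
  # -f: fail on HTTP errors, -L: follow redirects Jenkins may issue
  if ! body=$(curl -fsSL "$url"); then
    echo "no response from $url" >&2
    return 1
  fi
  count=$(printf '%s\n' "$body" | grep -c '^# TYPE ')
  if [ "$count" -gt 0 ]; then
    echo "Jenkins metrics OK (metric families: $count)"
  else
    echo "response from $url does not look like Prometheus metrics" >&2
    return 1
  fi
}
```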

Import a Dashboard for Jenkins

- Click on the “+” (plus) icon in the left sidebar to open the “Create” menu.

- Select “Dashboard.”

- Click on the “Import” dashboard option.

- Enter the dashboard ID 9964 (the community “Jenkins: Performance and Health Overview” dashboard).

- Click the “Load” button.

- Select the data source you added (Prometheus) from the dropdown.

- Click the “Import” button.
