Prerequisites

One Master (Controller)

Two Worker Nodes

To install Kubernetes locally, check out my previous blog post:

How to Bootstrap a Kubernetes Cluster

Install Node Exporter using Helm:

Efficiently monitor your Kubernetes cluster by deploying the Prometheus Node Exporter using Helm. Helm streamlines the process, providing a standardized and customizable approach to set up and manage this essential monitoring tool.

helm repo add prometheus-community https://prometheus-community.github.io/helm-charts

helm repo update

kubectl create namespace prometheus-node-exporter

helm install prometheus-node-exporter prometheus-community/prometheus-node-exporter --namespace=prometheus-node-exporter

kato@master1:~$ kubectl get pods -n prometheus-node-exporter

NAME READY STATUS RESTARTS AGE

prometheus-node-exporter-ngggc 1/1 Running 0 84s

prometheus-node-exporter-qf9wt 1/1 Running 0 84s

prometheus-node-exporter-sdxl2 1/1 Running 0 84s

Add a Job to Scrape Metrics on nodeip:9100/metrics in prometheus.yml:

Remember, we already have a Prometheus server running in our environment from part one.
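A minimal sketch of such a job, assuming the exporter listens on the chart's default port 9100 and that the targets are the three node IPs of this cluster (192.168.1.60 to 192.168.1.62). The job name node-exporter and the file name node-exporter-job.yml are placeholders of mine, not names from part one:

```shell
# Assumption: 9100 is the Node Exporter chart's default port; change it if you overrode it.
NODE_EXPORTER_PORT=9100
# Node IPs as reported by `kubectl get nodes -o wide` in this cluster.
NODE_IPS="192.168.1.60 192.168.1.61 192.168.1.62"

# Write the job as a YAML fragment, indented to sit under `scrape_configs:`.
{
  echo '  - job_name: "node-exporter"   # hypothetical job name'
  echo '    metrics_path: /metrics'
  echo '    static_configs:'
  echo '      - targets:'
  for ip in $NODE_IPS; do
    echo "          - \"${ip}:${NODE_EXPORTER_PORT}\""
  done
} > node-exporter-job.yml

# Print the fragment so it can be reviewed before pasting.
cat node-exporter-job.yml
```

Paste the generated fragment under the existing scrape_configs: key of prometheus.yml, then restart or reload Prometheus so the new targets are picked up. Running promtool check config against the edited file is a quick way to catch indentation mistakes first.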

Install ArgoCD

ArgoCD is a declarative, GitOps continuous delivery tool for Kubernetes applications. It allows you to maintain and manage your Kubernetes applications using Git repositories as the source of truth for declarative infrastructure manifests.

Installation steps:

helm repo add argo https://argoproj.github.io/argo-helm

helm repo update

kubectl create namespace argocd

helm install my-argo-cd argo/argo-cd --version 5.0.0 -n argocd

watch kubectl get all -n argocd

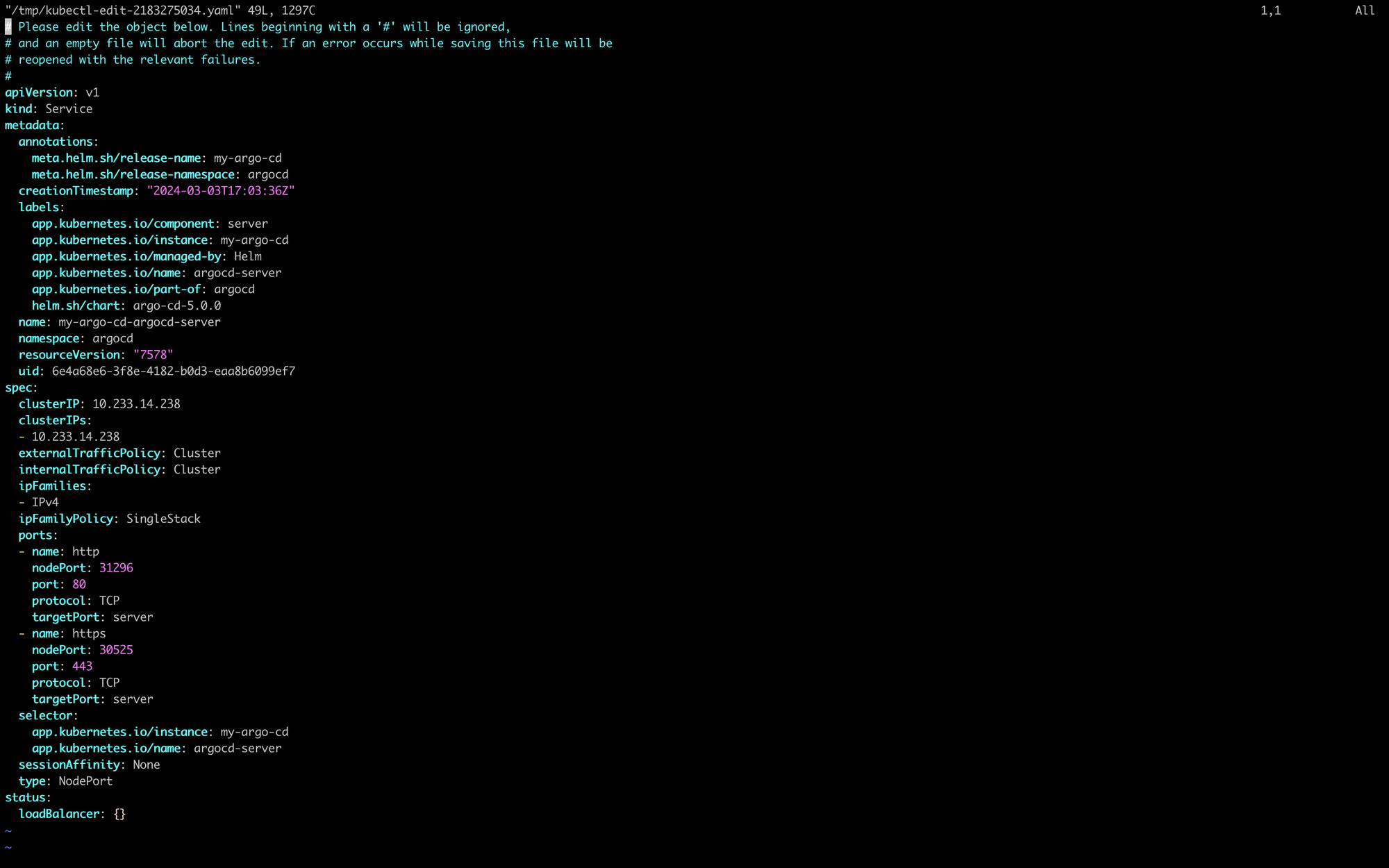

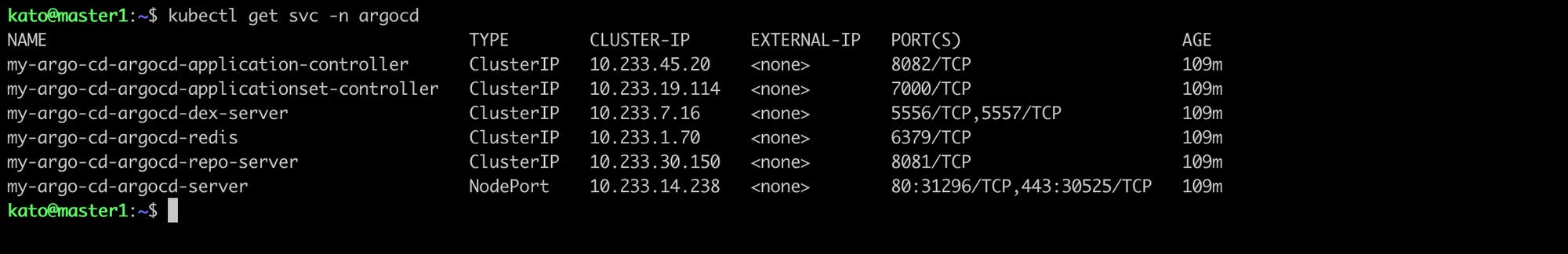

Expose argocd-server

By default, argocd-server is not publicly exposed. For the purpose of this workshop, we will use a NodePort to make it reachable. Edit the service and change spec.type from ClusterIP to NodePort:

kato@master1:~$ kubectl edit svc my-argo-cd-argocd-server -n argocd
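As a non-interactive alternative to kubectl edit, the same change can be sketched with kubectl patch. This is not the method used in this post, just an equivalent one-liner; it assumes the service name my-argo-cd-argocd-server created by the Helm release above:

```shell
# Merge patch that switches the service type to NodePort.
PATCH='{"spec": {"type": "NodePort"}}'

if command -v kubectl >/dev/null 2>&1; then
  kubectl patch svc my-argo-cd-argocd-server -n argocd -p "$PATCH"
  # Show the node ports Kubernetes assigned (see the PORT(S) column).
  kubectl get svc my-argo-cd-argocd-server -n argocd
else
  echo "kubectl not found; would apply patch: $PATCH"
fi
```

A patch is easier to repeat or script than an editor session, which helps if you rebuild the cluster often.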

To log in, use the username admin. The password is stored in the initial admin secret:

kato@master1:~$ export ARGO_PWD=$(kubectl -n argocd get secret argocd-initial-admin-secret -o jsonpath="{.data.password}" | base64 -d)

kato@master1:~$ echo $ARGO_PWD

y9o7F4hWV-TDhtif

kato@master1:~$

To access the ArgoCD UI:

Open the IP address of any node on the NodePort assigned to the argocd-server service, as shown here:

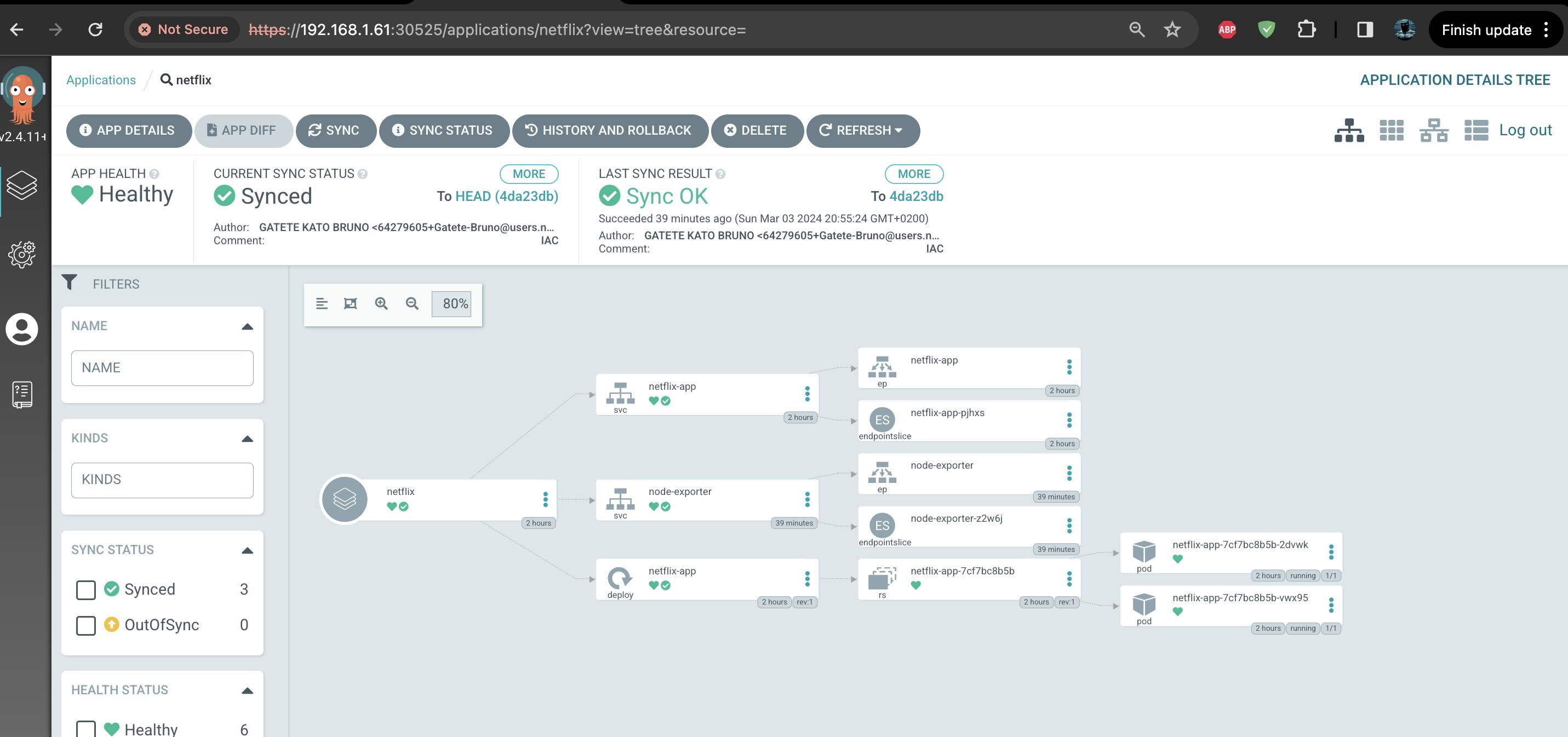

Deploy Netflix Application with ArgoCD

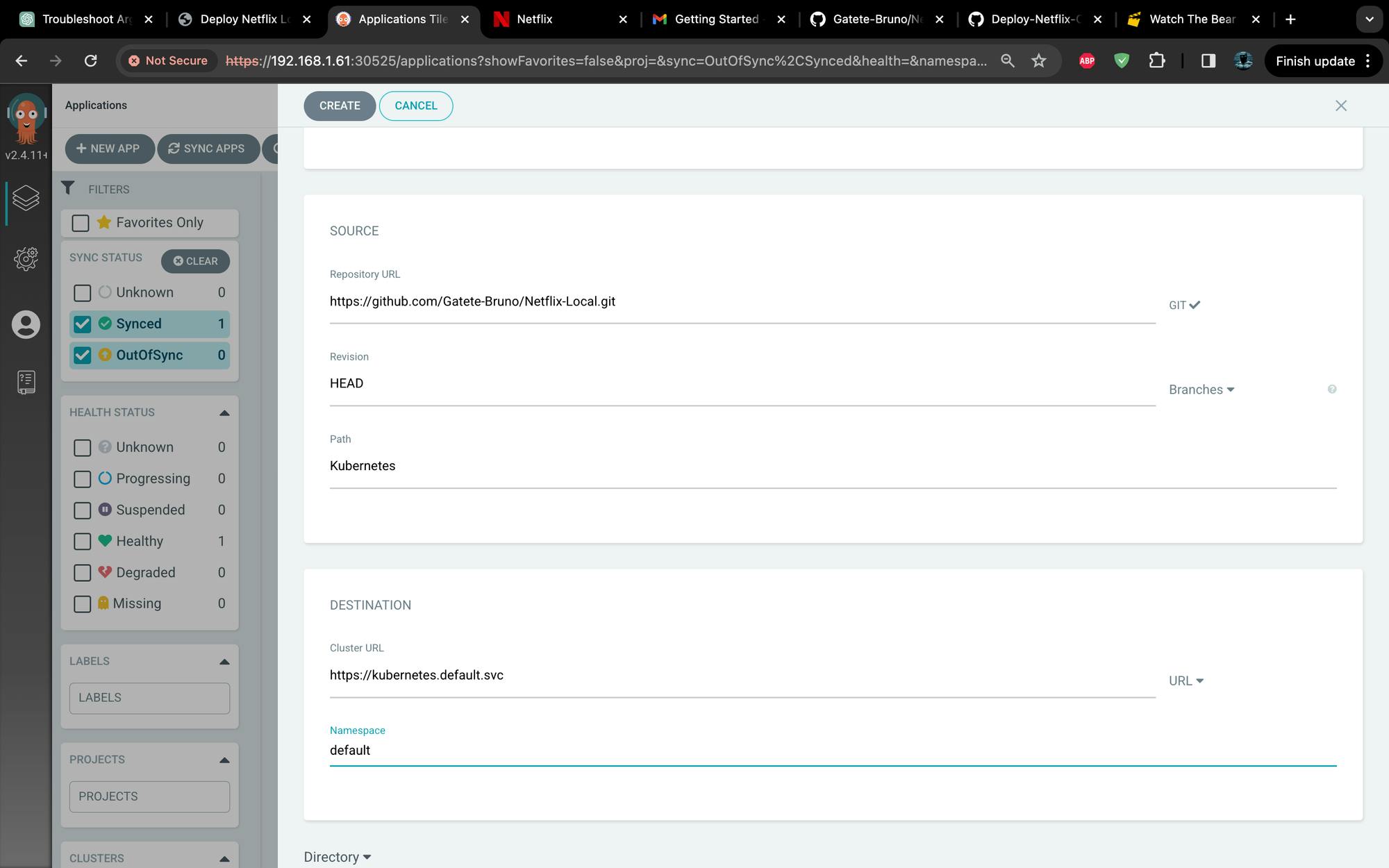

Configure ArgoCD Application:

In the ArgoCD web interface or using the ArgoCD CLI, create a new application.

Provide a name for your application.

Specify the source type as Git.

Set the repository URL to your Git repository's URL (e.g., https://github.com/Gatete-Bruno/Netflix-Local).

Choose the target namespace where your application will be deployed. In my case, I chose default.

Set Application Path:

If your application is in a subdirectory within your Git repository, set the path field to the relative path of your application within the repository.

In this repository, the directory that contains the Kubernetes YAML files is Kubernetes.

Configure Sync Policy:

Choose whether ArgoCD should sync your application automatically when it detects changes in Git, or only when you trigger a sync yourself. This is typically done in the "Sync Policy" section.

I used the Manual option.

Define the Cluster:

Specify the cluster where you want to deploy your application. This is usually done in the "Cluster" section of the application configuration.

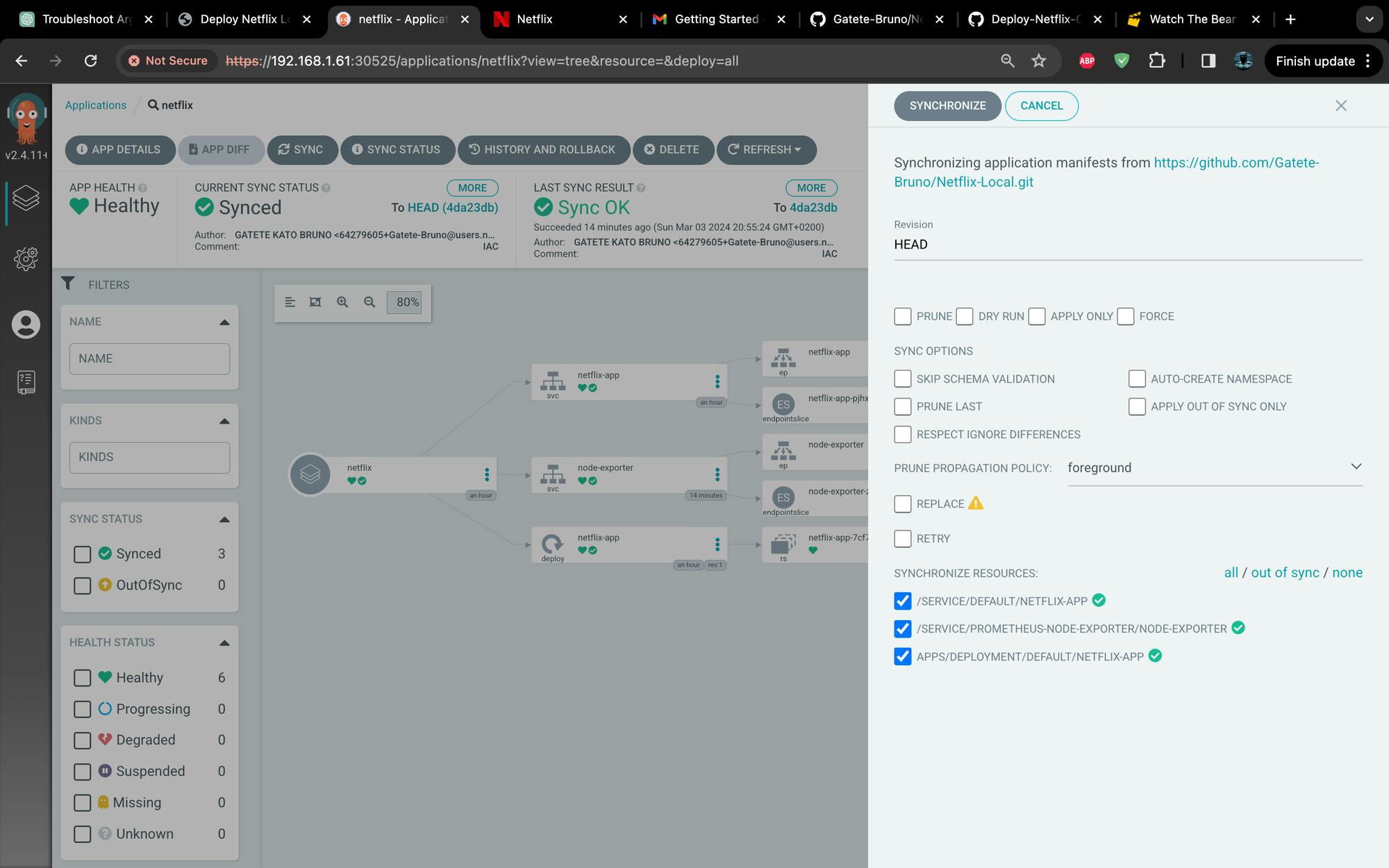
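The same settings can also be expressed declaratively. The following is a sketch, not the method used in this post: it writes an ArgoCD Application manifest with the repository URL, path, and namespace chosen above, and leaves out syncPolicy.automated so that syncing stays manual. The application name netflix, the file name netflix-app.yaml, the default project, the HEAD revision, and the in-cluster destination URL are all assumptions:

```shell
cat > netflix-app.yaml <<'EOF'
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: netflix            # hypothetical application name
  namespace: argocd        # namespace where ArgoCD itself runs
spec:
  project: default         # assumption: ArgoCD's built-in default project
  source:
    repoURL: https://github.com/Gatete-Bruno/Netflix-Local
    path: Kubernetes       # directory holding the Kubernetes YAML files
    targetRevision: HEAD   # assumption: track the default branch
  destination:
    server: https://kubernetes.default.svc   # assumption: the cluster ArgoCD runs in
    namespace: default
  # No automated sync policy is set, so syncs are triggered manually.
EOF

if command -v kubectl >/dev/null 2>&1; then
  kubectl apply -f netflix-app.yaml
else
  echo "kubectl not found; manifest written to netflix-app.yaml"
fi
```

Keeping the Application definition in a file means it can be versioned in Git alongside the manifests it deploys.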

Sync the Application:

Trigger an initial sync of your application either through the ArgoCD web interface or using the CLI. This will deploy your application to the specified target cluster.
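With the argocd CLI, the initial sync might look like the sketch below. The server address and the application name netflix are hypothetical placeholders (the real NodePort is whatever Kubernetes assigned to the argocd-server service), and --insecure is included on the assumption that the server presents a self-signed certificate:

```shell
# Hypothetical values: replace with your node IP, assigned NodePort, and application name.
ARGOCD_SERVER="${ARGOCD_SERVER:-192.168.1.60:30443}"
APP_NAME="${APP_NAME:-netflix}"

if command -v argocd >/dev/null 2>&1; then
  # ARGO_PWD is the initial admin password exported earlier.
  argocd login "$ARGOCD_SERVER" --username admin --password "$ARGO_PWD" --insecure
  argocd app sync "$APP_NAME"
  # Block until the application reports a healthy state.
  argocd app wait "$APP_NAME" --health
else
  echo "argocd CLI not found; would sync $APP_NAME via $ARGOCD_SERVER"
fi
```

Clicking Sync in the web interface does the same thing; the CLI form is mainly useful in scripts.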

The application is accessible on any of the node IPs:

kato@master1:~$ kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

master1 Ready control-plane,master 3h10m v1.23.10 192.168.1.60 <none> Ubuntu 20.04.5 LTS 5.4.0-169-generic docker://20.10.8

node1 Ready worker 3h10m v1.23.10 192.168.1.61 <none> Ubuntu 20.04.5 LTS 5.4.0-169-generic docker://20.10.8

node2 Ready worker 3h10m v1.23.10 192.168.1.62 <none> Ubuntu 20.04.5 LTS 5.4.0-169-generic docker://20.10.8

kato@master1:~$

![Local Netflix Application Deployment with ArgoCD on Kubernetes [Part Two]](https://cdn.hashnode.com/res/hashnode/image/upload/v1709495038245/cf15311d-2a9f-41e3-89fe-f6aa9631d402.webp?w=1600&h=840&fit=crop&crop=entropy&auto=compress,format&format=webp)